Kamakshaiah Musunuru is an open source software evangelist and full stack developer. He is an academic at GITAM (Deemed to be) University, Visakhapatnam, India, and also a freelance trainer in data science and analytics. His teaching interests are MIS, data science, and business analytics, including functional analytics such as marketing analytics and finance analytics. He teaches subjects such as R for business analytics, Python for business analytics, IoT, and Big Data analytics using Hadoop. His research interests relate to health care management, educational management, food and agriculture business, and the use of open source software for business applications. He is an advocate of open source software and has conducted a few workshops and seminars on open source software tools and their utility for business. He lived in Ethiopia, North-East Africa, for two years to teach business management and visited Bangkok, Thailand, on research assignments.

Artificial intelligence (AI) is intelligence demonstrated by machines, unlike the natural intelligence displayed by humans and animals, which involves consciousness and emotionality. The distinction between the former and the latter categories is often revealed by the acronym chosen. “Strong” AI is usually labelled as AGI (Artificial General Intelligence) while attempts to emulate “natural” intelligence have been called ABI (Artificial Biological Intelligence). Leading AI textbooks define the field as the study of “intelligent agents”: any device that perceives its environment and takes actions that maximize its chance of successfully achieving its goals. Colloquially, the term “artificial intelligence” is often used to describe machines (or computers) that mimic “cognitive” functions that humans associate with the human mind, such as “learning” and “problem solving”.

As machines become increasingly capable, tasks considered to require “intelligence” are often removed from the definition of AI, a phenomenon known as the AI effect. A quip in Tesler’s Theorem says “AI is whatever hasn’t been done yet.” 1 For instance, optical character recognition is frequently excluded from things considered to be AI, having become a routine technology. Modern machine capabilities generally classified as AI include successfully understanding human speech, competing at the highest level in strategic game systems (such as chess and Go) and in imperfect-information games like poker, self-driving cars, intelligent routing in content delivery networks, and military simulations.

Artificial intelligence was founded as an academic discipline in 1955, and in the years since has experienced several waves of optimism, followed by disappointment and the loss of funding (known as an “AI winter”), followed by new approaches, success and renewed funding. 2 After AlphaGo successfully defeated a professional Go player in 2015, artificial intelligence once again attracted widespread global attention.3 For most of its history, AI research has been divided into sub-fields that often fail to communicate with each other. These sub-fields are based on technical considerations, such as particular goals (e.g. “robotics” or “machine learning”), the use of particular tools (“logic” or artificial neural networks), or deep philosophical differences. Sub-fields have also been based on social factors (particular institutions or the work of particular researchers).

The traditional problems (or goals) of AI research include reasoning, knowledge representation, planning, learning, natural language processing, perception and the ability to move and manipulate objects. General intelligence is among the field’s long-term goals. Approaches include statistical methods, computational intelligence, and traditional symbolic AI. Many tools are used in AI, including versions of search and mathematical optimization, artificial neural networks, and methods based on statistics, probability and economics. The AI field draws upon computer science, information engineering, mathematics, psychology, linguistics, philosophy, and many other fields.

The field was founded on the assumption that human intelligence “can be so precisely described that a machine can be made to simulate it”. This raises philosophical arguments about the mind and the ethics of creating artificial beings endowed with human-like intelligence. These issues have been explored by myth, fiction and philosophy since antiquity. Some people also consider AI to be a danger to humanity if it progresses unabated. Others believe that AI, unlike previous technological revolutions, will create a risk of mass unemployment.

In the twenty-first century, AI techniques have experienced a resurgence following concurrent advances in computer power, large amounts of data, and theoretical understanding; and AI techniques have become an essential part of the technology industry, helping to solve many challenging problems in computer science, software engineering and operations research.

Graph theory is the study of graphs, which are mathematical structures used to model pairwise relations between objects. A graph in this context is made up of vertices (also called nodes or points) which are connected by edges (also called links or lines). A distinction is made between undirected graphs, where edges link two vertices symmetrically, and directed graphs, where edges link two vertices asymmetrically. Graphs are one of the prime objects of study in discrete mathematics.

Definitions in graph theory vary. The following are some of the more basic ways of defining graphs and related mathematical structures. In one restricted but very common sense of the term, a graph is an ordered pair $G = (V, E)$ comprising:

$V$, a set of vertices (also called nodes or points);

$E \subseteq \{\{x, y\} \mid x, y \in V \text{ and } x \neq y\}$, a set of edges (also called links or lines), which are unordered pairs of vertices (that is, an edge is associated with two distinct vertices).

To avoid ambiguity, this type of object may be called precisely an undirected simple graph.

In the edge $\{x, y\}$, the vertices $x$ and $y$ are called the endpoints of the edge. The edge is said to join $x$ and $y$ and to be incident on $x$ and on $y$. A vertex may exist in a graph and not belong to an edge. Multiple edges, not allowed under the definition above, are two or more edges that join the same two vertices.

In one more general sense of the term allowing multiple edges, a graph is an ordered triple $G = (V, E, \phi)$ comprising:

$V$, a set of vertices (also called nodes or points);

$E$, a set of edges (also called links or lines);

$\phi : E \to \{\{x, y\} \mid x, y \in V \text{ and } x \neq y\}$, an incidence function mapping every edge to an unordered pair of vertices (that is, an edge is associated with two distinct vertices).

To avoid ambiguity, this type of object may be called precisely an undirected multigraph. A loop is an edge that joins a vertex to itself. Graphs as defined in the two definitions above cannot have loops, because a loop joining a vertex $x$ to itself is the edge (for an undirected simple graph) or is incident on (for an undirected multigraph) $\{x, x\} = \{x\}$, which is not in $\{\{x, y\} \mid x, y \in V \text{ and } x \neq y\}$. So to allow loops the definitions must be expanded. For undirected simple graphs, the definition of $E$ should be modified to $E \subseteq \{\{x, y\} \mid x, y \in V\}$. For undirected multigraphs, the definition of $\phi$ should be modified to $\phi : E \to \{\{x, y\} \mid x, y \in V\}$. To avoid ambiguity, these types of objects may be called undirected simple graph permitting loops and undirected multigraph permitting loops, respectively.

$V$ and $E$ are usually taken to be finite, and many of the well-known results are not true (or are rather different) for infinite graphs because many of the arguments fail in the infinite case. Moreover, $V$ is often assumed to be non-empty, but $E$ is allowed to be the empty set. The order of a graph is $|V|$, its number of vertices. The size of a graph is $|E|$, its number of edges. The degree or valency of a vertex is the number of edges that are incident to it, where a loop is counted twice. The degree of a graph is the maximum of the degrees of its vertices. In an undirected simple graph of order $n$, the maximum degree of each vertex is $n - 1$ and the maximum size of the graph is $n(n - 1)/2$.

The edges of an undirected simple graph permitting loops $G$ induce a symmetric homogeneous relation $\sim$ on the vertices of $G$ that is called the adjacency relation of $G$. Specifically, for each edge $\{x, y\}$, its endpoints $x$ and $y$ are said to be adjacent to one another, which is denoted $x \sim y$.
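The definitions of order, size, degree and adjacency can be illustrated with a minimal Python sketch. The vertex names, the edge set, and the helper functions `order`, `size`, `degree` and `adjacent` are assumptions made for this example only; edges are stored as `frozenset` objects so that each one is an unordered pair.

```python
# Hypothetical undirected simple graph: a vertex set and a set of
# unordered pairs of distinct vertices.
V = {"a", "b", "c", "d"}
E = {frozenset(pair) for pair in [("a", "b"), ("a", "c"), ("b", "c"), ("c", "d")]}


def order(vertices):
    """Order of the graph: the number of vertices."""
    return len(vertices)


def size(edges):
    """Size of the graph: the number of edges."""
    return len(edges)


def degree(vertex, edges):
    """Number of edges incident on the vertex (a simple graph has no loops)."""
    return sum(1 for edge in edges if vertex in edge)


def adjacent(x, y, edges):
    """True when the unordered pair {x, y} is an edge."""
    return frozenset((x, y)) in edges


n = order(V)
max_size = n * (n - 1) // 2  # largest possible number of edges for order n
assert size(E) <= max_size
```

Because every edge has two endpoints, summing `degree` over all vertices gives twice the size of the graph, which is a quick sanity check on any edge set built this way.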

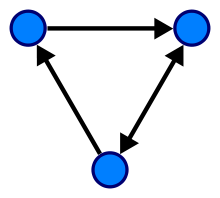

A directed graph or digraph is a graph in which edges have orientations. In one restricted but very common sense of the term, a directed graph is an ordered pair $G = (V, E)$ comprising:

$V$, a set of vertices (also called nodes or points);

$E \subseteq \{(x, y) \mid (x, y) \in V^2 \text{ and } x \neq y\}$, a set of edges (also called directed edges, directed links, directed lines, arrows or arcs) which are ordered pairs of vertices (that is, an edge is associated with two distinct vertices).

To avoid ambiguity, this type of object may be called precisely a directed simple graph. In the edge $(x, y)$ directed from $x$ to $y$, the vertices $x$ and $y$ are called the endpoints of the edge, $x$ the tail of the edge and $y$ the head of the edge. The edge is said to join $x$ and $y$ and to be incident on $x$ and on $y$. A vertex may exist in a graph and not belong to an edge. The edge $(y, x)$ is called the inverted edge of $(x, y)$. Multiple edges, not allowed under the definition above, are two or more edges with both the same tail and the same head.

In one more general sense of the term allowing multiple edges, a directed graph is an ordered triple $G = (V, E, \phi)$ comprising:

$V$, a set of vertices (also called nodes or points);

$E$, a set of edges (also called directed edges, directed links, directed lines, arrows or arcs);

$\phi : E \to \{(x, y) \mid (x, y) \in V^2 \text{ and } x \neq y\}$, an incidence function mapping every edge to an ordered pair of vertices (that is, an edge is associated with two distinct vertices).

To avoid ambiguity, this type of object may be called precisely a directed multigraph. A loop is an edge that joins a vertex to itself. Directed graphs as defined in the two definitions above cannot have loops, because a loop joining a vertex $x$ to itself is the edge (for a directed simple graph) or is incident on (for a directed multigraph) $(x, x)$, which is not in $\{(x, y) \mid (x, y) \in V^2 \text{ and } x \neq y\}$. So to allow loops the definitions must be expanded. For directed simple graphs, the definition of $E$ should be modified to $E \subseteq \{(x, y) \mid (x, y) \in V^2\}$. For directed multigraphs, the definition of $\phi$ should be modified to $\phi : E \to \{(x, y) \mid (x, y) \in V^2\}$. To avoid ambiguity, these types of objects may be called precisely a directed simple graph permitting loops and a directed multigraph permitting loops (or a quiver) respectively. The edges of a directed simple graph permitting loops $G$ form a homogeneous relation $\sim$ on the vertices of $G$ that is called the adjacency relation of $G$. Specifically, for each edge $(x, y)$, its endpoints $x$ and $y$ are said to be adjacent to one another, which is denoted $x \sim y$.
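A directed simple graph can be sketched in Python in the same spirit, with edges stored as ordered `(tail, head)` tuples. The graph below and the helpers `out_degree`, `in_degree`, `inverted` and `has_loop` are hypothetical names chosen for illustration.

```python
# Hypothetical directed simple graph: edges are ordered pairs (tail, head).
V = {"x", "y", "z"}
E = {("x", "y"), ("y", "z"), ("z", "x"), ("x", "z")}


def out_degree(vertex, edges):
    """Number of edges whose tail is the vertex."""
    return sum(1 for tail, _ in edges if tail == vertex)


def in_degree(vertex, edges):
    """Number of edges whose head is the vertex."""
    return sum(1 for _, head in edges if head == vertex)


def inverted(edge):
    """The inverted edge (y, x) of an edge (x, y)."""
    tail, head = edge
    return (head, tail)


def has_loop(edges):
    """True if some edge joins a vertex to itself."""
    return any(tail == head for tail, head in edges)
```

Unlike the undirected case, `("x", "y")` and `("y", "x")` are different edges here, so an edge and its inverted edge may or may not both be present.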

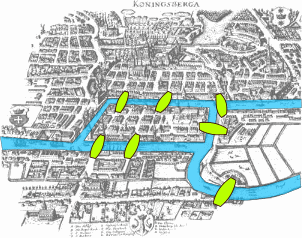

The paper written by Leonhard Euler on the Seven Bridges of Königsberg and published in 1736 is regarded as the first paper in the history of graph theory. 4 This paper, as well as the one written by Vandermonde on the knight problem, carried on with the analysis situs initiated by Leibniz. Euler’s formula relating the number of edges, vertices, and faces of a convex polyhedron was studied and generalized by Cauchy and L’Huilier, and represents the beginning of the branch of mathematics known as topology.

More than one century after Euler’s paper on the bridges of Königsberg and while Listing was introducing the concept of topology, Cayley was led by an interest in particular analytical forms arising from differential calculus to study a particular class of graphs, the trees. This study had many implications for theoretical chemistry. The techniques he used mainly concern the enumeration of graphs with particular properties. Enumerative graph theory then arose from the results of Cayley and the fundamental results published by Pólya between 1935 and 1937. These were generalized by De Bruijn in 1959. Cayley linked his results on trees with contemporary studies of chemical composition. The fusion of ideas from mathematics with those from chemistry began what has become part of the standard terminology of graph theory.

In particular, the term “graph” was introduced by Sylvester in a paper published in 1878 in Nature, where he draws an analogy between “quantic invariants” and “co-variants” of algebra and molecular diagrams:

“[...] Every invariant and co-variant thus becomes expressible by a graph precisely identical with a Kekuléan diagram or chemicograph. [...] I give a rule for the geometrical multiplication of graphs, i.e. for constructing a graph to the product of in- or co-variants whose separate graphs are given. [...]”

The first textbook on graph theory was written by Dénes Kőnig, and published in 1936. 5 Another book by Frank Harary, published in 1969, was “considered the world over to be the definitive textbook on the subject”, and enabled mathematicians, chemists, electrical engineers and social scientists to talk to each other. Harary donated all of the royalties to fund the Pólya Prize.6

One of the most famous and stimulating problems in graph theory is the four color problem: “Is it true that any map drawn in the plane may have its regions colored with four colors, in such a way that any two regions having a common border have different colors?” This problem was first posed by Francis Guthrie in 1852 and its first written record is in a letter of De Morgan addressed to Hamilton the same year. Many incorrect proofs have been proposed, including those by Cayley, Kempe, and others. The study and the generalization of this problem by Tait, Heawood, Ramsey and Hadwiger led to the study of the colorings of the graphs embedded on surfaces with arbitrary genus. Tait’s reformulation generated a new class of problems, the factorization problems, particularly studied by Petersen and Kőnig. The works of Ramsey on colorations and more specially the results obtained by Turán in 1941 were at the origin of another branch of graph theory, extremal graph theory.

The four color problem remained unsolved for more than a century. In 1969 Heinrich Heesch published a method for solving the problem using computers. A computer-aided proof produced in 1976 by Kenneth Appel and Wolfgang Haken makes fundamental use of the notion of “discharging” developed by Heesch. The proof involved checking the properties of 1,936 configurations by computer, and was not fully accepted at the time due to its complexity. A simpler proof considering only 633 configurations was given twenty years later by Robertson, Seymour, Sanders and Thomas.

The autonomous development of topology from 1860 to 1930 fed back into graph theory through the works of Jordan, Kuratowski and Whitney. Another important factor in the common development of graph theory and topology came from the use of the techniques of modern algebra. The first example of such a use comes from the work of the physicist Gustav Kirchhoff, who in 1845 published Kirchhoff’s circuit laws for calculating the voltage and current in electric circuits.

The introduction of probabilistic methods in graph theory, especially in the study by Erdős and Rényi of the asymptotic probability of graph connectivity, gave rise to yet another branch, known as random graph theory, which has been a fruitful source of graph-theoretic results.

Graphs can be used to model many types of relations and processes in physical, biological, social and information systems. Many practical problems can be represented by graphs. Emphasizing their application to real-world systems, the term network is sometimes defined to mean a graph in which attributes (e.g. names) are associated with the vertices and edges, and the subject that expresses and understands the real-world systems as a network is called network science.

In computer science, graphs are used to represent networks of communication, data organization, computational devices, the flow of computation, etc. For instance, the link structure of a website can be represented by a directed graph, in which the vertices represent web pages and directed edges represent links from one page to another. A similar approach can be taken to problems in social media, travel, biology, computer chip design, mapping the progression of neuro-degenerative diseases, and many other fields. The development of algorithms to handle graphs is therefore of major interest in computer science. The transformation of graphs is often formalized and represented by graph rewrite systems. Complementary to graph transformation systems focusing on rule-based in-memory manipulation of graphs are graph databases geared towards transaction-safe, persistent storing and querying of graph-structured data.

Graph-theoretic methods, in various forms, have proven particularly useful in linguistics, since natural language often lends itself well to discrete structure. Traditionally, syntax and compositional semantics follow tree-based structures, whose expressive power lies in the principle of compositionality, modeled in a hierarchical graph. More contemporary approaches such as head-driven phrase structure grammar model the syntax of natural language using typed feature structures, which are directed acyclic graphs. Within lexical semantics, especially as applied to computers, modeling word meaning is easier when a given word is understood in terms of related words; semantic networks are therefore important in computational linguistics. Still, other methods in phonology (e.g. optimality theory, which uses lattice graphs) and morphology (e.g. finite-state morphology, using finite-state transducers) are common in the analysis of language as a graph. Indeed, the usefulness of this area of mathematics to linguistics has borne organizations such as TextGraphs, as well as various ’Net’ projects, such as WordNet, VerbNet, and others.

Graph theory is also used to study molecules in chemistry and physics. In condensed matter physics, the three-dimensional structure of complicated simulated atomic structures can be studied quantitatively by gathering statistics on graph-theoretic properties related to the topology of the atoms. Also, “the Feynman graphs and rules of calculation summarize quantum field theory in a form in close contact with the experimental numbers one wants to understand.” In chemistry a graph makes a natural model for a molecule, where vertices represent atoms and edges represent bonds. This approach is especially used in computer processing of molecular structures, ranging from chemical editors to database searching. In statistical physics, graphs can represent local connections between interacting parts of a system, as well as the dynamics of a physical process on such systems. Similarly, in computational neuroscience graphs can be used to represent functional connections between brain areas that interact to give rise to various cognitive processes, where the vertices represent different areas of the brain and the edges represent the connections between those areas. Graph theory plays an important role in the electrical modeling of electrical networks; here, weights are associated with the resistance of the wire segments to obtain the electrical properties of network structures. Graphs are also used to represent the micro-scale channels of porous media, in which the vertices represent the pores and the edges represent the smaller channels connecting the pores. Chemical graph theory uses the molecular graph as a means to model molecules. Graphs and networks are excellent models to study and understand phase transitions and critical phenomena. Removal of nodes or edges leads to a critical transition where the network breaks into small clusters, which is studied as a phase transition. This breakdown is studied via percolation theory.

Graph theory is also widely used in sociology as a way, for example, to measure actors’ prestige or to explore rumor spreading, notably through the use of social network analysis software. Under the umbrella of social networks are many different types of graphs. Acquaintanceship and friendship graphs describe whether people know each other. Influence graphs model whether certain people can influence the behavior of others. Finally, collaboration graphs model whether two people work together in a particular way, such as acting in a movie together.

Likewise, graph theory is useful in biology and conservation efforts, where a vertex can represent a region where certain species exist (or which they inhabit) and the edges represent migration paths or movement between the regions. This information is important when looking at breeding patterns, tracking the spread of disease or parasites, or studying how changes to movement can affect other species. Graphs are also commonly used in molecular biology and genomics to model and analyse datasets with complex relationships. For example, graph-based methods are often used to “cluster” cells together into cell types in single-cell transcriptome analysis. Another use is to model genes or proteins in a pathway and study the relationships between them, such as metabolic pathways and gene regulatory networks. Evolutionary trees, ecological networks, and hierarchical clustering of gene expression patterns are also represented as graph structures. Graph-based methods are pervasive among researchers in some fields of biology, and they will only become more widespread as technology develops to leverage this kind of high-throughput multidimensional data. Graph theory is also used in connectomics; nervous systems can be seen as a graph, where the nodes are neurons and the edges are the connections between them.

In mathematics, graphs are useful in geometry and certain parts of topology such as knot theory. Algebraic graph theory has close links with group theory. Algebraic graph theory has been applied to many areas including dynamic systems and complexity.

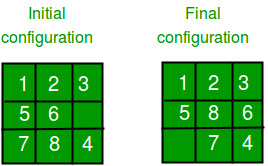

State space search is a process used in the field of computer science, including artificial intelligence (AI), in which successive configurations or states of an instance are considered, with the intention of finding a goal state with the desired property.

Problems are often modelled as a state space, a set of states that a problem can be in. The set of states forms a graph where two states are connected if there is an operation that can be performed to transform the first state into the second.

State space search often differs from traditional computer science search methods because the state space is implicit: the typical state space graph is much too large to generate and store in memory. Instead, nodes are generated as they are explored, and typically discarded thereafter. A solution to a combinatorial search instance may consist of the goal state itself, or of a path from some initial state to the goal state.

In state space search, a state space is formally represented as a tuple $\langle S, A, \mathit{Action}(s), \mathit{Result}(s, a), \mathit{Cost}(s, a) \rangle$, in which:

$S$ is the set of all possible states;

$A$ is the set of possible actions, not related to a particular state but regarding all the state space;

$\mathit{Action}(s)$ is the function that establishes which actions can be performed in a certain state;

$\mathit{Result}(s, a)$ is the function that returns the state reached by performing action $a$ in state $s$;

$\mathit{Cost}(s, a)$ is the cost of performing action $a$ in state $s$. In many state spaces the cost is a constant, but this is not true in general.
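To make the five components concrete, here is a small sketch of a toy state space written in Python. The problem itself (integers from 0 to 20 with the actions `"increment"` and `"double"`), the costs, and the function names `action`, `result`, `cost` and `path_cost` are all assumptions introduced for illustration.

```python
# Hypothetical toy state space: states are the integers 0..20.
STATES = range(0, 21)               # the set of all possible states
ACTIONS = ("increment", "double")   # the set of possible actions


def action(s):
    """Actions that can be performed in state s."""
    allowed = []
    if s + 1 in STATES:
        allowed.append("increment")
    if s != 0 and s * 2 in STATES:
        allowed.append("double")
    return allowed


def result(s, a):
    """State reached by performing action a in state s."""
    return s + 1 if a == "increment" else s * 2


def cost(s, a):
    """Cost of performing action a in state s (not constant in this example)."""
    return 1 if a == "increment" else 2


def path_cost(start, actions):
    """Apply a sequence of actions and return (final state, total cost)."""
    state, total = start, 0
    for a in actions:
        if a not in action(state):
            raise ValueError(f"action {a!r} is not allowed in state {state}")
        total += cost(state, a)
        state = result(state, a)
    return state, total
```

For instance, `path_cost(1, ["double", "double", "increment"])` walks through the states 1, 2, 4, 5 and accumulates a cost of 2 + 2 + 1.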

According to Poole and Mackworth, the following are uninformed state-space search methods, meaning that they do not have any prior information about the goal’s location.

Traditional depth-first search

Breadth-first search

Iterative deepening

Lowest-cost-first search
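As one illustration of an uninformed method, the following sketch runs breadth-first search over an implicit state space, generating nodes only when they are expanded. The successor rule (from `n`, move to `n + 1` or `2 * n`), the `limit` bound, and the function names are assumptions made for this example.

```python
from collections import deque


def successors(state):
    """Hypothetical implicit state space: from n, move to n + 1 or 2 * n."""
    return [state + 1, state * 2]


def breadth_first_search(start, goal, limit=1000):
    """Return a path with the fewest steps from start to goal, or None.

    States above `limit` are ignored so that the search always terminates.
    """
    frontier = deque([start])
    parent = {start: None}  # doubles as the set of generated states
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent and nxt <= limit:
                parent[nxt] = state
                frontier.append(nxt)
    return None
```

The search uses no information about where the goal lies; it simply expands states in order of their distance from the start. Replacing the `deque` with a stack would turn the same skeleton into a depth-first search.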

Some algorithms take into account information about the goal node’s location in the form of a heuristic function. Poole and Mackworth cite the following examples as informed search algorithms:

Informed/Heuristic breadth-first search

Greedy best-first search

A* search
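A heuristic function can be folded into the search as in the following minimal A* sketch. It assumes a small 4-connected grid with unit step costs and uses the Manhattan distance as the heuristic; the grid layout, the `walls` set and the function name `a_star` are illustrative choices, not taken from Poole and Mackworth.

```python
import heapq


def a_star(start, goal, walls, width, height):
    """A* on a 4-connected grid with unit step cost; returns a path or None."""

    def h(cell):
        # Manhattan distance: never overestimates the remaining cost here.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # entries are (g + h, g, cell)
    best_g = {start: 0}
    parent = {start: None}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale entry: a cheaper route to this cell was found
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if not inside or nxt in walls:
                continue
            new_g = g + 1
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                parent[nxt] = cell
                heapq.heappush(frontier, (new_g + h(nxt), new_g, nxt))
    return None
```

Ordering the frontier by `h` alone would give greedy best-first search; adding the cost so far, `g`, is what distinguishes A*.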

Depth-first search (DFS) is an algorithm for traversing or searching tree or graph data structures. The algorithm starts at the root node (selecting some arbitrary node as the root node in the case of a graph) and explores as far as possible along each branch before backtracking. A version of depth-first search was investigated in the 19th century by French mathematician Charles Pierre Trémaux as a strategy for solving mazes. 7 8

| Class | Search algorithm |
| --- | --- |
| Data structure | Graph |
| Worst-case performance | $O(\lvert V \rvert + \lvert E \rvert)$ for explicit graphs traversed without repetition, $O(b^d)$ for implicit graphs with branching factor $b$ searched to depth $d$ |
| Worst-case space complexity | $O(\lvert V \rvert)$ if entire graph is traversed without repetition, $O(\text{longest path length searched}) = O(bd)$ for implicit graphs without elimination of duplicate nodes |

The time and space analysis of DFS differs according to its application area. In theoretical computer science, DFS is typically used to traverse an entire graph, and takes time $O(|V| + |E|)$, 9 where $|V|$ is the number of vertices and $|E|$ the number of edges. This is linear in the size of the graph. In these applications it also uses space $O(|V|)$ in the worst case to store the stack of vertices on the current search path as well as the set of already-visited vertices. Thus, in this setting, the time and space bounds are the same as for breadth-first search and the choice of which of these two algorithms to use depends less on their complexity and more on the different properties of the vertex orderings the two algorithms produce.

For applications of DFS in relation to specific domains, such as searching for solutions in artificial intelligence or web-crawling, the graph to be traversed is often either too large to visit in its entirety or infinite (DFS may suffer from non-termination). In such cases, search is only performed to a limited depth; due to limited resources, such as memory or disk space, one typically does not use data structures to keep track of the set of all previously visited vertices. When search is performed to a limited depth, the time is still linear in terms of the number of expanded vertices and edges (although this number is not the same as the size of the entire graph because some vertices may be searched more than once and others not at all) but the space complexity of this variant of DFS is only proportional to the depth limit, and as a result, is much smaller than the space needed for searching to the same depth using breadth-first search. For such applications, DFS also lends itself much better to heuristic methods for choosing a likely-looking branch. When an appropriate depth limit is not known a priori, iterative deepening depth-first search applies DFS repeatedly with a sequence of increasing limits. In the artificial intelligence mode of analysis, with a branching factor greater than one, iterative deepening increases the running time by only a constant factor over the case in which the correct depth limit is known due to the geometric growth of the number of nodes per level. DFS may also be used to collect a sample of graph nodes. However, incomplete DFS, similarly to incomplete BFS, is biased towards nodes of high degree.
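Depth-limited search and iterative deepening can be sketched as follows. The code keeps only the current path in memory, so its space use is proportional to the depth limit. The function names, the `max_depth` default and the successor rule used in the usage note are assumptions for this example.

```python
def depth_limited_search(state, goal, successors, limit, path=None):
    """DFS that explores at most `limit` edges deep; returns a path or None."""
    path = [state] if path is None else path
    if state == goal:
        return list(path)
    if limit == 0:
        return None
    for nxt in successors(state):
        if nxt in path:  # only the current path is remembered, not all visits
            continue
        path.append(nxt)
        found = depth_limited_search(nxt, goal, successors, limit - 1, path)
        path.pop()
        if found is not None:
            return found
    return None


def iterative_deepening(start, goal, successors, max_depth=50):
    """Repeat depth-limited search with limits 0, 1, 2, ... up to max_depth."""
    for limit in range(max_depth + 1):
        found = depth_limited_search(start, goal, successors, limit)
        if found is not None:
            return found
    return None
```

With a hypothetical successor rule such as `lambda n: [n + 1, n * 2]`, the call `iterative_deepening(1, 10, ...)` works on an infinite state space yet still terminates, because each pass is bounded by its depth limit. Shallow levels are re-explored on every pass, which is the constant-factor overhead described above.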

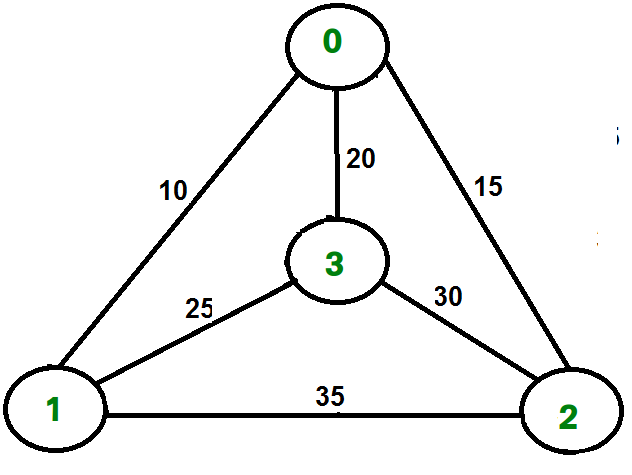

For the following graph:

A depth-first search starting at the node A, assuming that the left edges in the shown graph are chosen before right edges, and assuming the search remembers previously visited nodes and will not repeat them (since this is a small graph), will visit the nodes in the following order: A, B, D, F, E, C, G. The edges traversed in this search form a Trémaux tree, a structure with important applications in graph theory. Performing the same search without remembering previously visited nodes results in visiting the nodes in the order A, B, D, F, E, A, B, D, F, E, etc. forever, caught in the A, B, D, F, E cycle and never reaching C or G. Iterative deepening is one technique to avoid this infinite loop and would reach all nodes.
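The effect of remembering visited nodes can be seen in a short Python sketch. The adjacency lists below are an assumed encoding of a seven-node example graph with nodes A to G (the figure itself is not reproduced in the text), with each node's neighbours listed left to right; `dfs_order` is a hypothetical helper name.

```python
def dfs_order(graph, start):
    """Return the nodes in the order a recursive DFS first visits them."""
    order = []
    seen = set()

    def visit(node):
        seen.add(node)
        order.append(node)
        for neighbour in graph[node]:
            if neighbour not in seen:  # never repeat a visited node
                visit(neighbour)

    visit(start)
    return order


# Assumed undirected example graph; each edge appears in both lists.
graph = {
    "A": ["B", "C", "E"],
    "B": ["A", "D", "F"],
    "C": ["A", "G"],
    "D": ["B"],
    "E": ["A", "F"],
    "F": ["B", "E"],
    "G": ["C"],
}

print(dfs_order(graph, "A"))
```

Under these assumptions the search goes deep along A, B, D, backtracks to B, continues through F to E, and only then returns to A to reach C and G. Without the `seen` set the recursion would cycle through the loop containing A, B, F and E and never terminate.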

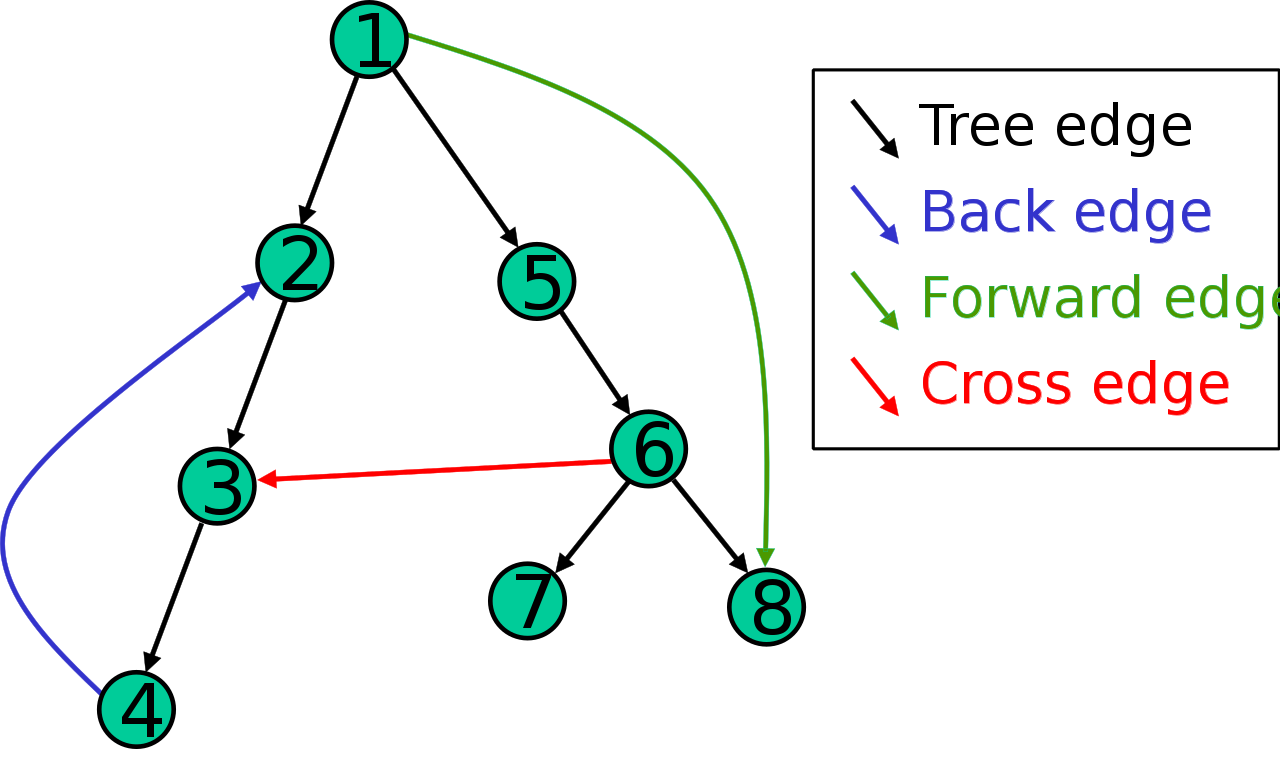

The result of a depth-first search of a graph can be conveniently described in terms of a spanning tree of the vertices reached during the search. Based on this spanning tree, the edges of the original graph can be divided into three classes: forward edges, which point from a node of the tree to one of its descendants, back edges, which point from a node to one of its ancestors, and cross edges, which do neither. Sometimes tree edges, edges which belong to the spanning tree itself, are classified separately from forward edges. If the original graph is undirected then all of its edges are tree edges or back edges.
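One common way to compute this classification for a directed graph is to record when each vertex is discovered and when it is finished. The sketch below takes that approach and labels tree edges separately from forward edges; the function name `classify_edges` and the example graph are assumptions for illustration.

```python
def classify_edges(graph):
    """Label each directed edge as 'tree', 'back', 'forward' or 'cross'."""
    discover, finish, kinds = {}, {}, {}
    clock = 0

    def visit(u):
        nonlocal clock
        clock += 1
        discover[u] = clock
        for v in graph[u]:
            if v not in discover:
                kinds[(u, v)] = "tree"      # v is first reached through u
                visit(v)
            elif v not in finish:
                kinds[(u, v)] = "back"      # v is an ancestor still in progress
            elif discover[u] < discover[v]:
                kinds[(u, v)] = "forward"   # v is an already finished descendant
            else:
                kinds[(u, v)] = "cross"     # v was finished before u was found
        clock += 1
        finish[u] = clock

    for u in graph:
        if u not in discover:
            visit(u)
    return kinds


# Hypothetical directed graph containing one edge of each kind.
example = {"A": ["B", "C", "D"], "B": ["C"], "C": ["A"], "D": ["C"]}
kinds = classify_edges(example)
```

In this example the edge from C to A closes a cycle and is a back edge, the edge from A to C skips ahead to a descendant and is a forward edge, and the edge from D to C is a cross edge.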

It is also possible to use depth-first search to linearly order the vertices of a graph or tree. There are four possible ways of doing this:

A preordering is a list of the vertices in the order that they were first visited by the depth-first search algorithm. This is a compact and natural way of describing the progress of the search, as was done in the example above. A preordering of an expression tree is the expression in Polish notation.

A postordering is a list of the vertices in the order that they were last visited by the algorithm. A postordering of an expression tree is the expression in reverse Polish notation.

A reverse preordering is the reverse of a preordering, i.e. a list of the vertices in the opposite order of their first visit. Reverse preordering is not the same as postordering.

A reverse postordering is the reverse of a postordering, i.e. a list of the vertices in the opposite order of their last visit. Reverse postordering is not the same as preordering.

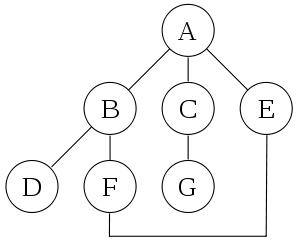

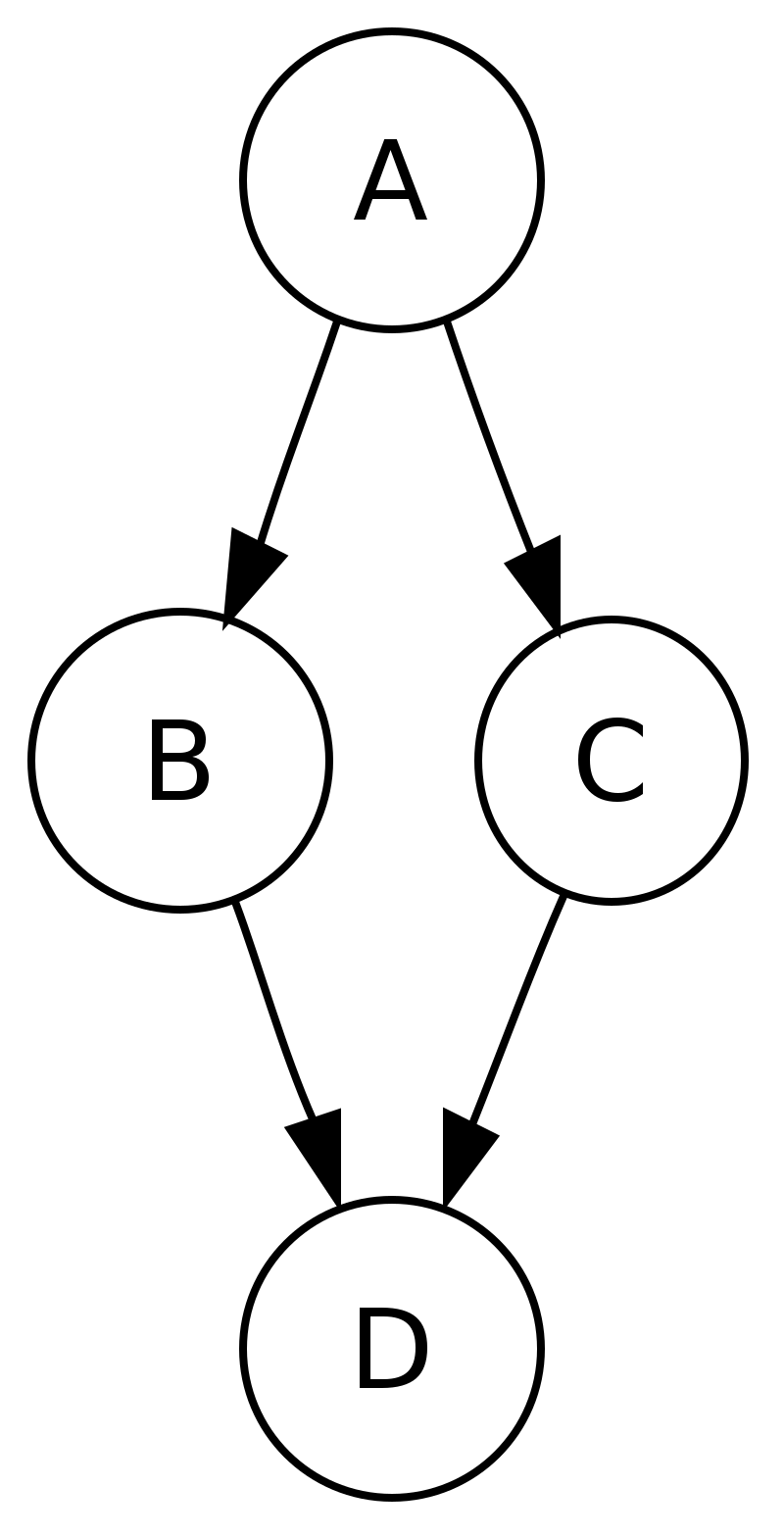

For binary trees there is additionally in-ordering and reverse in-ordering. For example, when searching the directed graph below beginning at node A, the sequence of traversals is either A B D B A C A or A C D C A B A (choosing to first visit B or C from A is up to the algorithm). Note that repeat visits in the form of backtracking to a node, to check if it has still unvisited neighbors, are included here (even if it is found to have none). Thus the possible preorderings are A B D C and A C D B, while the possible postorderings are D B C A and D C B A, and the possible reverse postorderings are A C B D and A B C D.

Reverse postordering produces a topological sorting of any directed acyclic graph. This ordering is also useful in control-flow analysis as it often represents a natural linearization of the control flows. The graph above might represent the flow of control in a code fragment such as `if (A) then { B } else { C }; D`, and it is natural to consider this code in the order A B C D or A C B D but not natural to use the order A B D C or A C D B.
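The orderings discussed above can be computed in a few lines of Python. The sketch assumes the four-node directed acyclic graph with edges from A to B, A to C, B to D and C to D, and visits B before C; `dfs_orderings` is a hypothetical helper name.

```python
def dfs_orderings(graph, root):
    """Return (preordering, postordering) of a DFS started at root."""
    pre, post = [], []
    seen = set()

    def visit(node):
        seen.add(node)
        pre.append(node)        # first visit
        for child in graph[node]:
            if child not in seen:
                visit(child)
        post.append(node)       # last visit, after all descendants

    visit(root)
    return pre, post


# Assumed example: A -> B, A -> C, B -> D, C -> D.
graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
pre, post = dfs_orderings(graph, "A")
reverse_post = post[::-1]

# In a topological sorting every edge points from an earlier to a later vertex.
position = {node: i for i, node in enumerate(reverse_post)}
is_topological = all(
    position[u] < position[v] for u in graph for v in graph[u]
)
```

Visiting B first gives the preordering A B D C, the postordering D B C A and the reverse postordering A C B D. The preordering places D before C even though C has an edge to D, so it is not a topological sorting, while the reverse postordering is.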

The computational complexity of DFS was investigated by John Reif. More precisely, given a graph $G$, let $O = (v_1, \dots, v_n)$ be the ordering computed by the standard recursive DFS algorithm. This ordering is called the lexicographic depth-first search ordering. John Reif considered the complexity of computing the lexicographic depth-first search ordering, given a graph and a source. A decision version of the problem (testing whether some vertex $u$ occurs before some vertex $v$ in this order) is P-complete, meaning that it is “a nightmare for parallel processing”. 10

A depth-first search ordering (not necessarily the lexicographic one), can be computed by a randomized parallel algorithm in the complexity class RNC. As of 1997, it remained unknown whether a depth-first traversal could be constructed by a deterministic parallel algorithm, in the complexity class NC.

Algorithms that use depth-first search as a building block include:

Finding connected components.

Topological sorting.

Finding 2-(edge or vertex)-connected components.

Finding 3-(edge or vertex)-connected components.

Finding the bridges of a graph.

Generating words in order to plot the limit set of a group.

Finding strongly connected components.

Determining whether a species is closer to one species or another in a phylogenetic tree.

Planarity testing.

Solving puzzles with only one solution, such as mazes. (DFS can be adapted to find all solutions to a maze by only including nodes on the current path in the visited set.)

Maze generation may use a randomized depth-first search.

Finding biconnectivity in graphs.
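As a small example of DFS used as a building block, the sketch below finds the connected components of an undirected graph with an explicit stack instead of recursion. The function name `connected_components` and the sample graph are assumptions for illustration.

```python
def connected_components(graph):
    """Return the connected components of an undirected graph as sorted lists."""
    seen = set()
    components = []
    for start in graph:
        if start in seen:
            continue
        # Depth-first traversal from an unvisited vertex collects one component.
        stack = [start]
        seen.add(start)
        component = []
        while stack:
            node = stack.pop()
            component.append(node)
            for neighbour in graph[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
        components.append(sorted(component))
    return components


# Hypothetical graph with three components; vertex 6 is isolated.
graph = {1: [2], 2: [1, 3], 3: [2], 4: [5], 5: [4], 6: []}
print(connected_components(graph))
```

Each vertex is pushed at most once and each adjacency list is scanned once, so the running time stays linear in the number of vertices and edges.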

Depth First Traversal (or Search) for a graph is similar to Depth First Traversal of a tree. The only catch here is, unlike trees, graphs may contain cycles (a node may be visited twice). To avoid processing a node more than once, use a boolean visited array.

Input: n = 4, e = 6

Output: DFS from vertex

Depth-first search is an algorithm for traversing or searching tree or graph data structures. The algorithm starts at the root node (selecting some arbitrary node as the root node in the case of a graph) and explores as far as possible along each branch before backtracking. So the basic idea is to start from the root or any arbitrary node and mark the node and move to the adjacent unmarked node and continue this loop until there is no unmarked adjacent node. Then backtrack and check for other unmarked nodes and traverse them. Finally, print the nodes in the path.

Below is an implementation of simple Depth First Traversal. The Python implementation uses an adjacency list representation of graphs: a dictionary of lists (defaultdict(list)) stores the list of adjacent nodes of each vertex.

    # Python 3 program to print a DFS traversal
    # of a given graph from a source vertex
    from collections import defaultdict


    class Graph:
        """Directed graph using an adjacency list representation."""

        def __init__(self):
            # Default dictionary mapping each vertex to its list of neighbours
            self.graph = defaultdict(list)

        def addEdge(self, u, v):
            """Add a directed edge u -> v to the graph."""
            self.graph[u].append(v)

        def DFSUtil(self, v, visited):
            """Recursive helper used by DFS."""
            # Mark the current node as visited and print it
            visited.add(v)
            print(v, end=' ')

            # Recur for all unvisited vertices adjacent to this vertex
            for neighbour in self.graph[v]:
                if neighbour not in visited:
                    self.DFSUtil(neighbour, visited)

        def DFS(self, v):
            """Print a DFS traversal starting from vertex v."""
            # Create a set to store visited vertices
            visited = set()

            # Call the recursive helper function to print the traversal
            self.DFSUtil(v, visited)


    # Driver code: create the example graph
    g = Graph()
    g.addEdge(0, 1)
    g.addEdge(0, 2)
    g.addEdge(1, 2)
    g.addEdge(2, 0)
    g.addEdge(2, 3)
    g.addEdge(3, 3)

    print("Following is DFS from (starting from vertex 2)")
    g.DFS(2)

Time complexity: $O(V + E)$, where $V$ is the number of vertices and $E$ is the number of edges in the graph.

Space complexity: $O(V)$, since an extra visited array of size $V$ is required.

The above code traverses only the vertices reachable from a given source vertex. Not all vertices may be reachable from a given vertex, as in a disconnected graph, so this corner case needs separate handling. To do a complete DFS traversal of such graphs, run DFS from every node that is still unvisited after the previous DFS. The recursive function remains the same.

Create a recursive function that takes the index of the node and a visited array.

Mark the current node as visited and print the node.

Traverse all the adjacent and unmarked nodes and call the recursive function with the index of the adjacent node.

Run a loop from 0 to the number of vertices; if a node was left unvisited by the previous DFS calls, call the recursive function with that node.

The code below shows how to handle a disconnected graph.

    # Python program to print a complete DFS traversal of a graph,
    # including vertices that are not reachable from the first vertex
    from collections import defaultdict


    class Graph:
        """Directed graph using an adjacency list representation."""

        def __init__(self):
            # Default dictionary to store the graph
            self.graph = defaultdict(list)

        def addEdge(self, u, v):
            """Add a directed edge u -> v to the graph."""
            self.graph[u].append(v)

        def DFSUtil(self, v, visited):
            """Recursive helper used by DFS."""
            # Mark the current node as visited and print it
            visited.add(v)
            print(v, end=" ")

            # Recur for all unvisited vertices adjacent to this vertex
            for neighbour in self.graph[v]:
                if neighbour not in visited:
                    self.DFSUtil(neighbour, visited)

        def DFS(self):
            """Print a DFS traversal that covers every vertex of the graph."""
            # Create a set to store all visited vertices
            visited = set()

            # Start a DFS from every vertex that is still unvisited.
            # Iterate over a copy of the keys, because looking up a vertex
            # without outgoing edges adds a key to the defaultdict.
            for vertex in list(self.graph):
                if vertex not in visited:
                    self.DFSUtil(vertex, visited)


    # Driver code: create the example graph
    print("Following is Depth First Traversal \n")
    g = Graph()
    g.addEdge(0, 1)
    g.addEdge(0, 2)
    g.addEdge(1, 2)
    g.addEdge(2, 0)
    g.addEdge(2, 3)
    g.addEdge(3, 3)
    g.DFS()

Time complexity: $O(V + E)$, where $V$ is the number of vertices and $E$ is the number of edges in the graph.

Space complexity: $O(V)$, since an extra visited array of size $V$ is required.

Breadth-first search (BFS) is an algorithm for searching a tree data structure for a node that satisfies a given property. It starts at the tree root and explores all nodes at the present depth prior to moving on to the nodes at the next depth level. Extra memory, usually a queue, is needed to keep track of the child nodes that were encountered but not yet explored.

For example, in a chess endgame a chess engine may build the game tree from the current position by applying all possible moves, and use breadth-first search to find a win position for white. Implicit trees (such as game trees or other problem-solving trees) may be of infinite size; breadth-first search is guaranteed to find a solution node if one exists.

In contrast, (plain) depth-first search, which explores the node branch as far as possible before backtracking and expanding other nodes, may get lost in an infinite branch and never make it to the solution node. Iterative deepening depth-first search avoids the latter drawback at the price of exploring the tree’s top parts over and over again. On the other hand, both depth-first algorithms get along without extra memory. Breadth-first search can be generalized to graphs, when the start node (sometimes referred to as a ’search key’) is explicitly given, and precautions are taken against following a vertex twice.

BFS and its application in finding connected components of graphs were invented in 1945 by Konrad Zuse, in his (rejected) Ph.D. thesis on the Plankalkül programming language, but this was not published until 1972. It was reinvented in 1959 by Edward F. Moore, who used it to find the shortest path out of a maze, and later developed by C. Y. Lee into a wire routing algorithm (published 1961).

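| Property | Value |
| --- | --- |
| Class | Search algorithm |
| Data structure | Graph |
| Worst-case performance | $O(\lvert V \rvert + \lvert E \rvert) = O(b^d)$ |
| Worst-case space complexity | $O(\lvert V \rvert) = O(b^d)$ |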

This non-recursive implementation is similar to the non-recursive implementation of depth-first search, but differs from it in two ways:

it uses a queue (First In First Out) instead of a stack and

it checks whether a vertex has been explored before enqueueing the vertex rather than delaying this check until the vertex is dequeued from the queue.

If G is a tree, replacing the queue of this breadth-first search algorithm with a stack will yield a depth-first search algorithm. For general graphs, replacing the stack of the iterative depth-first search implementation with a queue would also produce a breadth-first search algorithm, although a somewhat nonstandard one.

The queue Q contains the frontier along which the algorithm is currently searching. Nodes can be labelled as explored by storing them in a set, or by an attribute on each node, depending on the implementation. Note that the word node is usually interchangeable with the word vertex. The parent attribute of each node is useful for accessing the nodes in a shortest path, for example by backtracking from the destination node up to the starting node, once the BFS has been run and the predecessor nodes have been set. Breadth-first search produces a so-called breadth-first tree. You can see how a breadth-first tree looks in the following example.
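The role of the queue and of the parent attribute can be sketched as follows. This is a minimal illustration rather than a definitive implementation: the dictionary `parent` stands in for the per-node parent attribute and also serves as the explored set, and the small graph `adj` is invented for the example.

```python
from collections import deque


def bfs_shortest_path(adj, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    parent = {start: None}              # also marks which nodes are explored
    queue = deque([start])              # the frontier, in FIFO order
    while queue:
        node = queue.popleft()
        if node == goal:
            # Backtrack from the goal to the start through the parents
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in adj.get(node, []):
            if nxt not in parent:       # check before enqueueing
                parent[nxt] = node
                queue.append(nxt)
    return None


# Hypothetical directed graph
adj = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["E"], "E": []}
path = bfs_shortest_path(adj, "A", "E")
print(path)
```

The `parent` entries set during the search form the breadth-first tree; following them backwards from any reached node yields a shortest path to the start.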

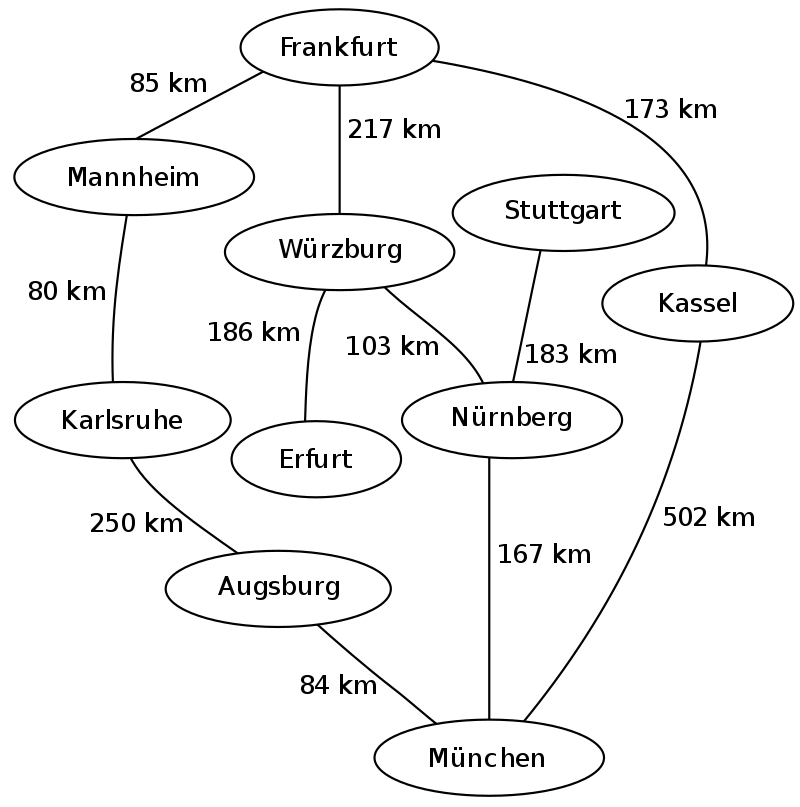

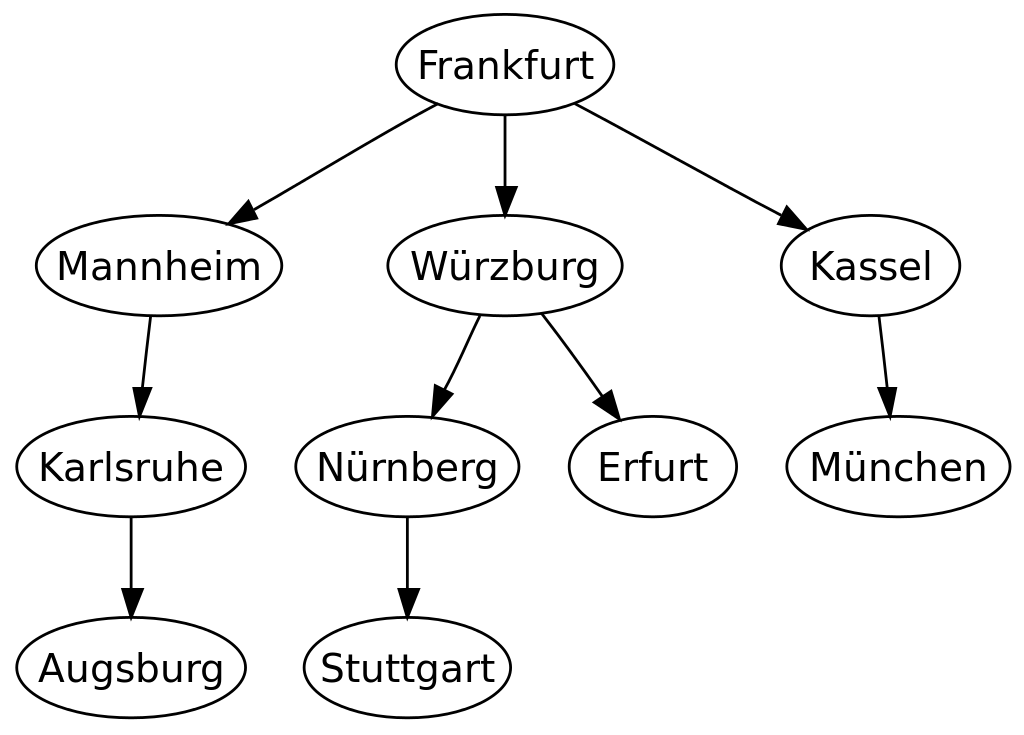

The following is an example of the breadth-first tree obtained by running a BFS on German cities starting from Frankfurt:

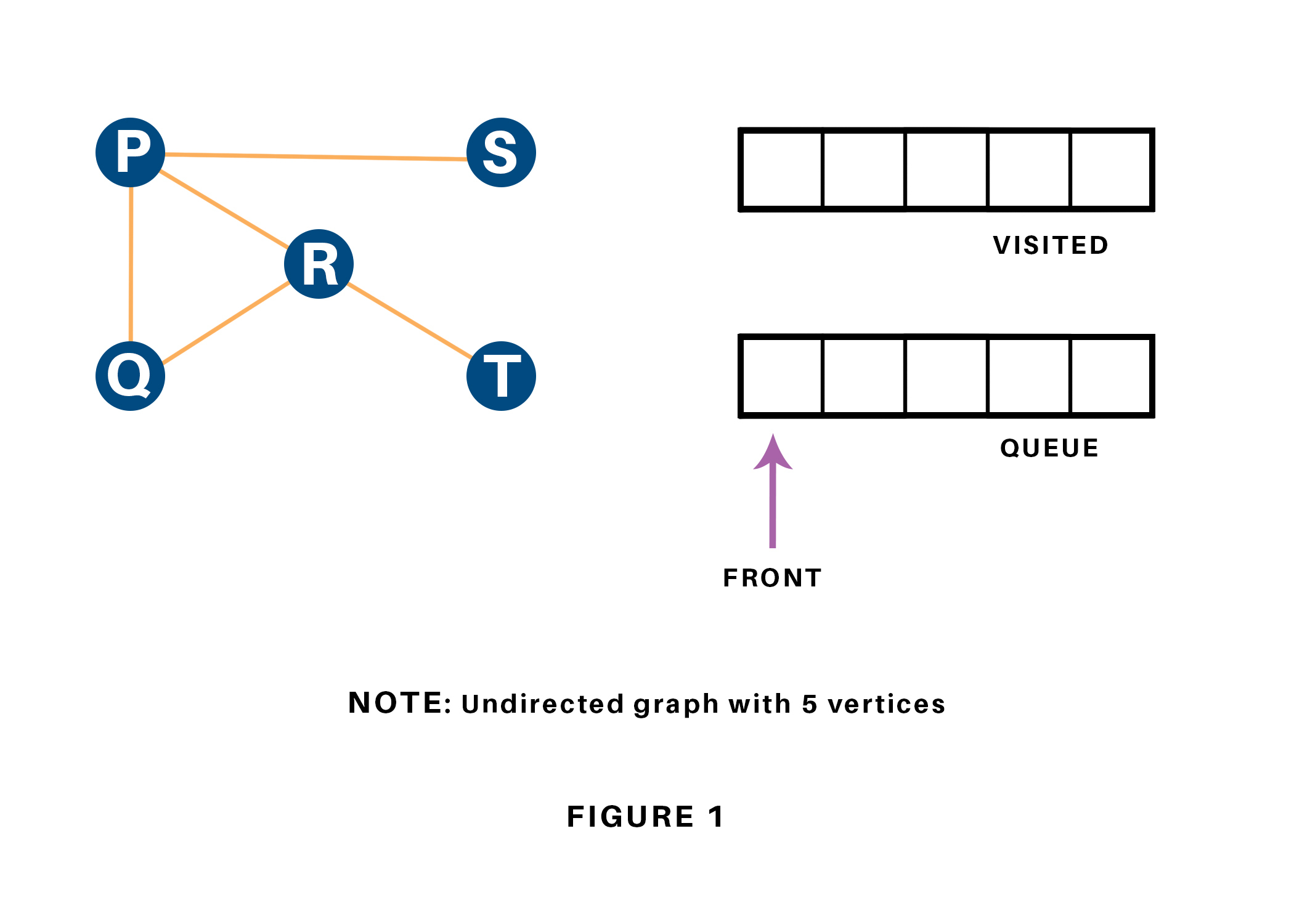

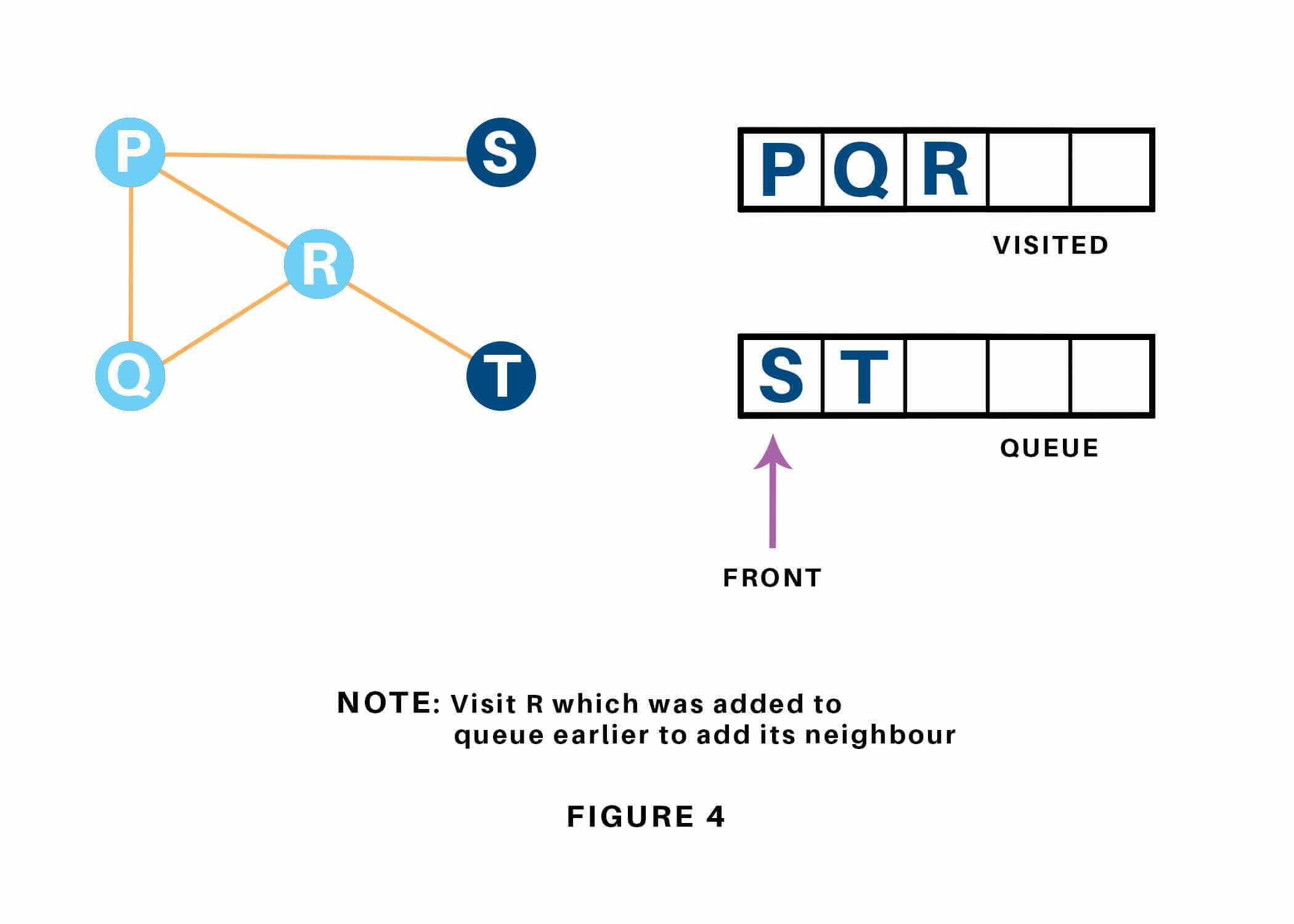

Let us see how this algorithm works with an example. Here, we will use an undirected graph with 5 vertices. Refer to Figure 1.12.

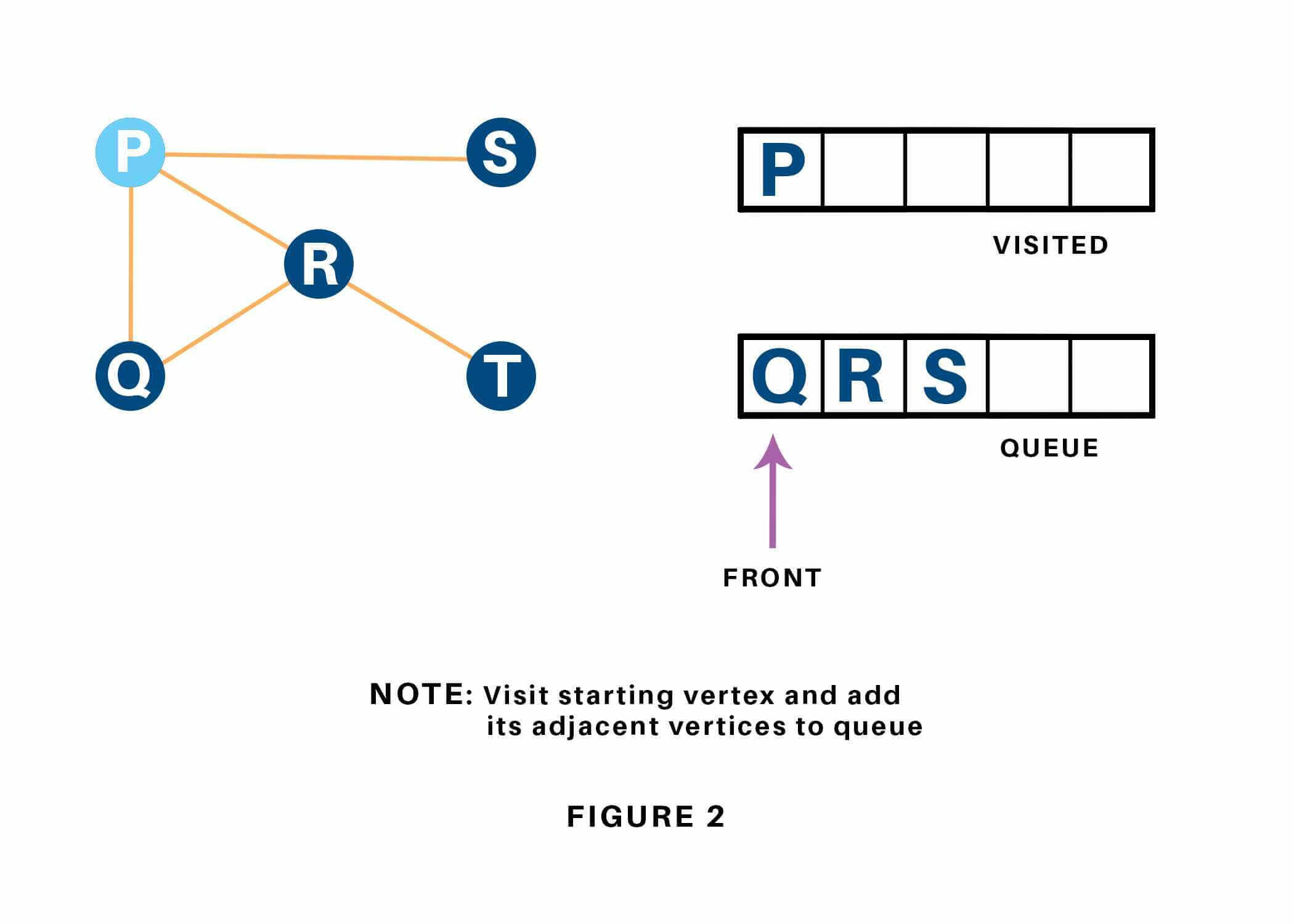

We begin from vertex P. The BFS algorithm starts by putting it in the visited list and putting all its adjacent vertices in the queue. Refer to Figure 1.13.

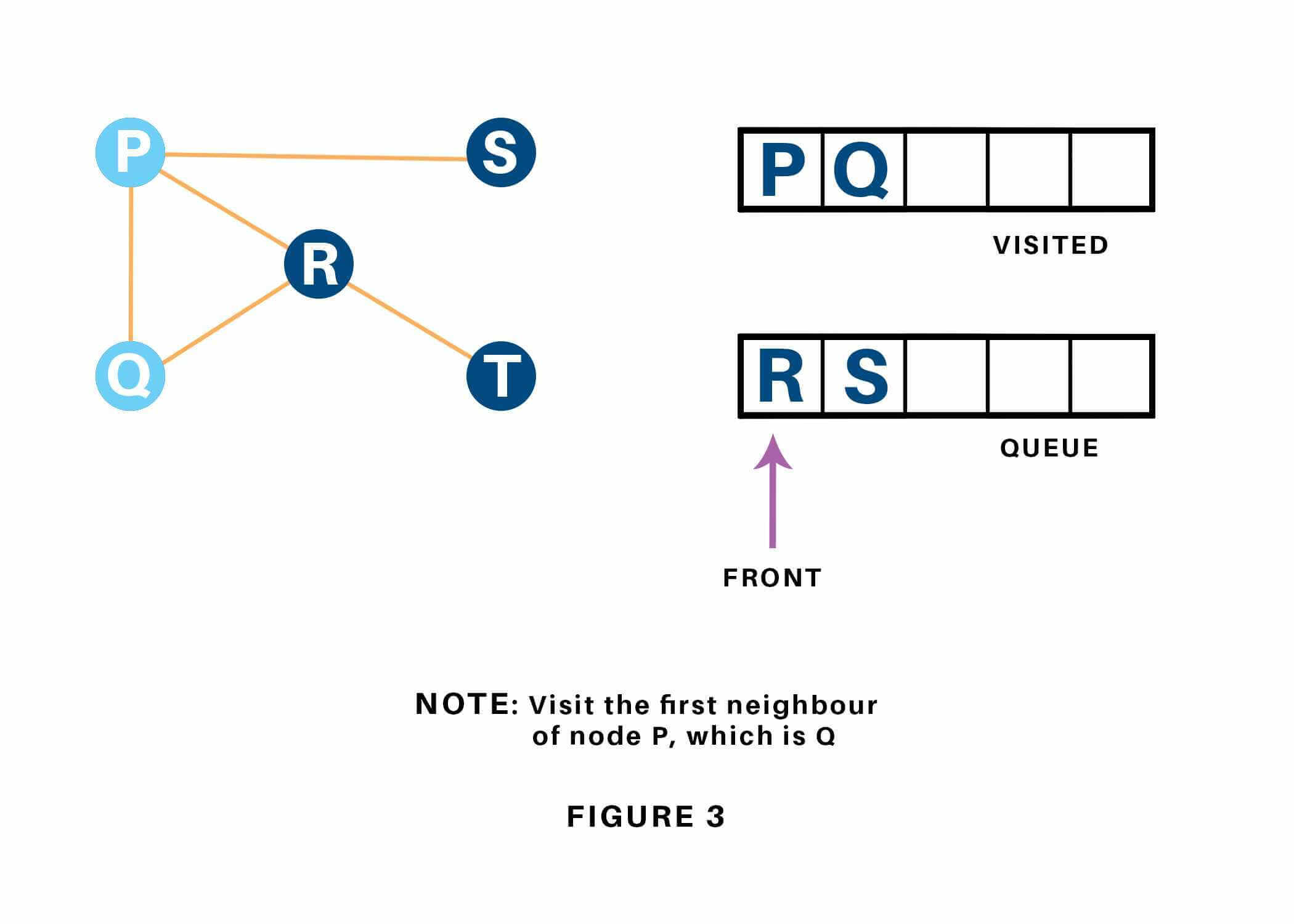

Next, we visit the element at the front of the queue, i.e. Q, and go to its adjacent nodes. Since P has already been visited, we visit R instead. Refer to the figure below.

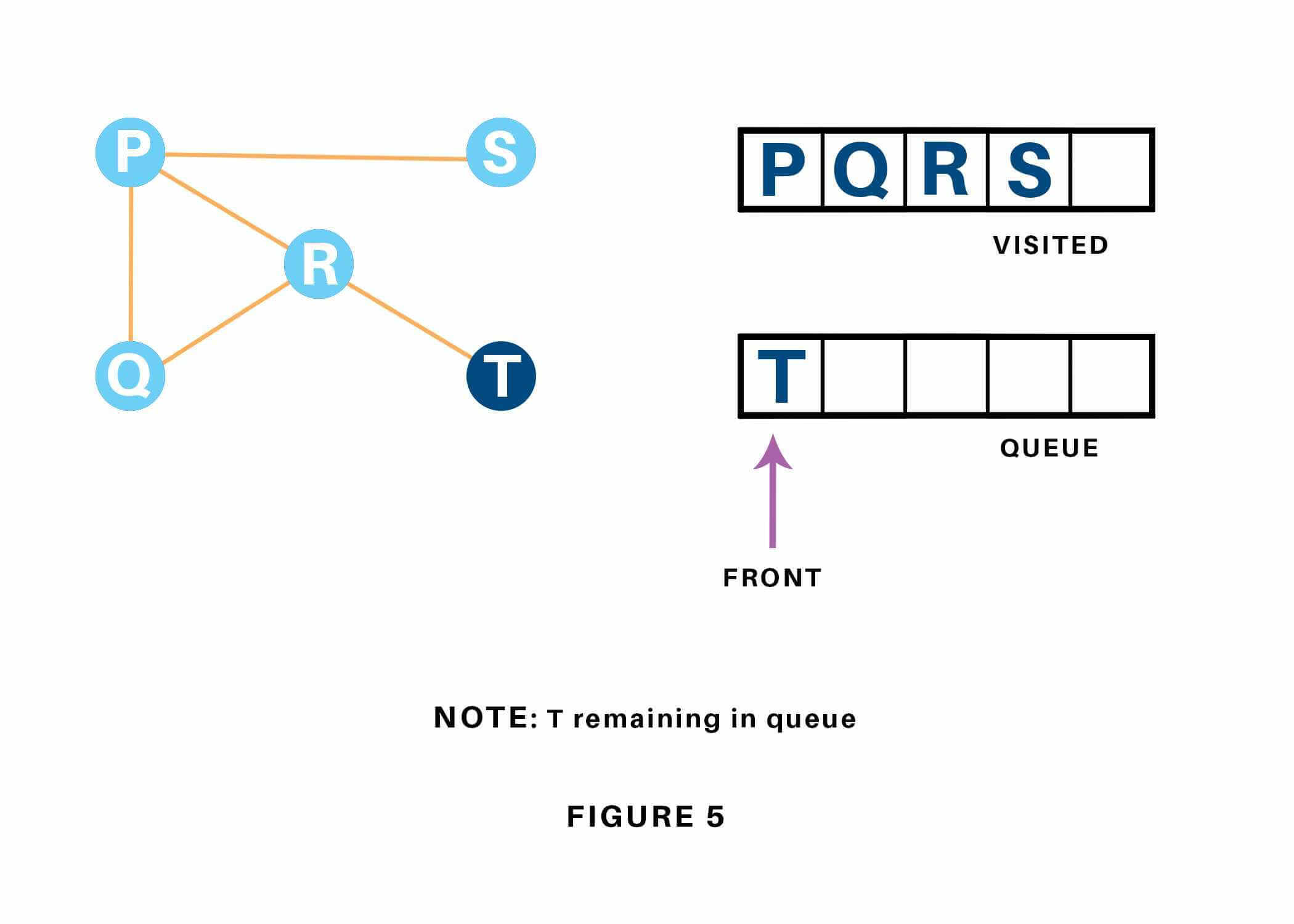

Vertex R has an unvisited adjacent vertex, T, so we add it to the rear of the queue and visit S, which is at the front of the queue. Refer to Figures 1.15 and 1.16.

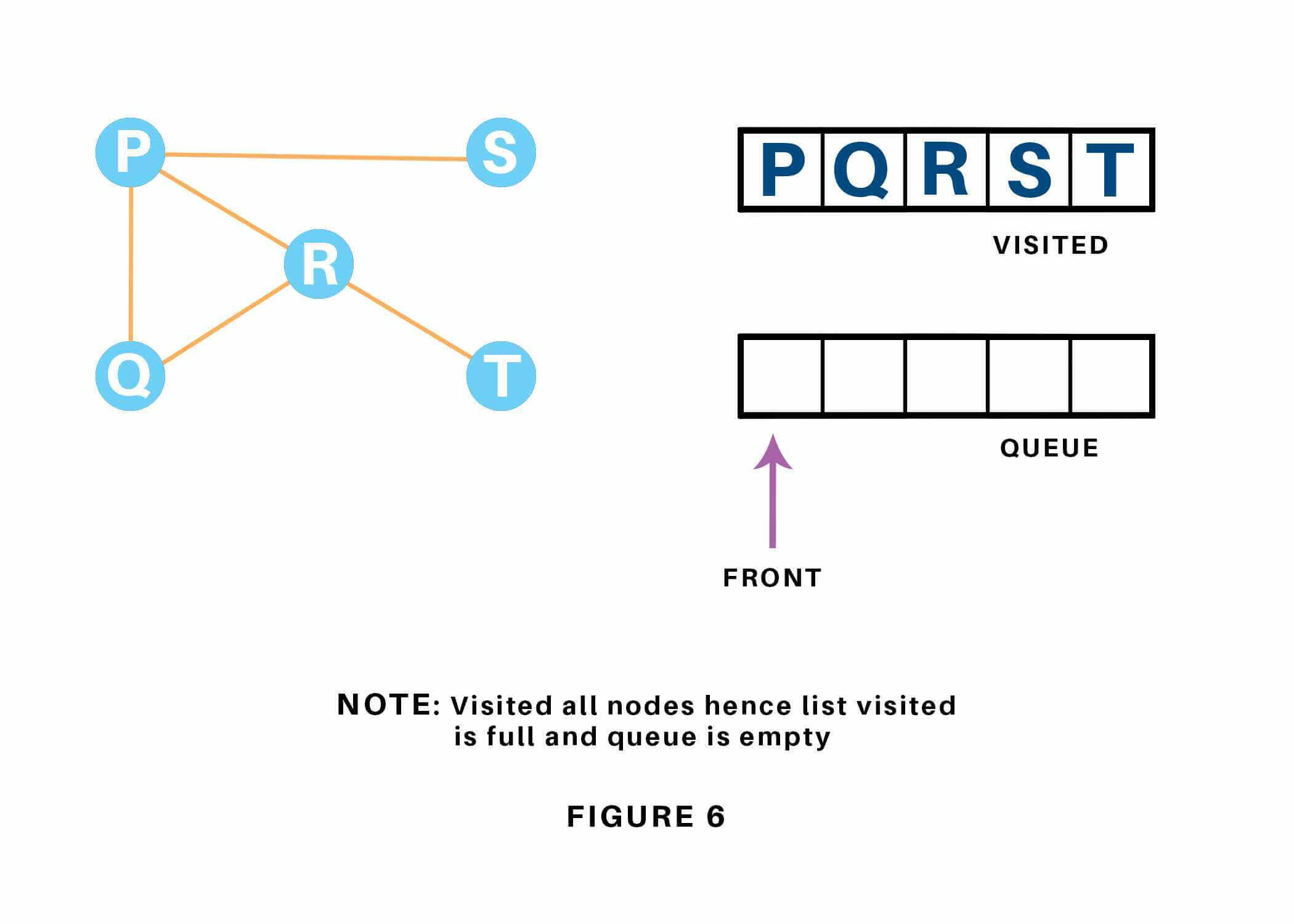

Now only T remains in the queue, since the only adjacent node of S, i.e. P, is already visited. We visit it. Refer to Figure 1.17.

Since the queue is empty, we have completed the traversal of the graph. The time complexity of the breadth-first search algorithm is $O(V + E)$, where $V$ is the number of nodes and $E$ is the number of edges. The space complexity of the BFS algorithm is $O(V)$.

The time complexity can be expressed as $O(|V| + |E|)$, since every vertex and every edge will be explored in the worst case. $|V|$ is the number of vertices and $|E|$ is the number of edges in the graph. Note that $O(|E|)$ may vary between $O(1)$ and $O(|V|^2)$, depending on how sparse the input graph is.

When the number of vertices in the graph is known ahead of time, and additional data structures are used to determine which vertices have already been added to the queue, the space complexity can be expressed as $O(|V|)$, where $|V|$ is the number of vertices. This is in addition to the space required for the graph itself, which may vary depending on the graph representation used by an implementation of the algorithm.

When working with graphs that are too large to store explicitly (or infinite), it is more practical to describe the complexity of breadth-first search in different terms: to find the nodes that are at distance $d$ from the start node (measured in number of edge traversals), BFS takes $O(b^{d+1})$ time and memory, where $b$ is the “branching factor” of the graph (the average out-degree).

In the analysis of algorithms, the input to breadth-first search is assumed to be a finite graph, represented as an adjacency list, adjacency matrix, or similar representation. However, in the application of graph traversal methods in artificial intelligence the input may be an implicit representation of an infinite graph. In this context, a search method is described as being complete if it is guaranteed to find a goal state if one exists. Breadth-first search is complete, but depth-first search is not. When applied to infinite graphs represented implicitly, breadth-first search will eventually find the goal state, but depth first search may get lost in parts of the graph that have no goal state and never return.
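Completeness on an implicit infinite graph can be illustrated with a small sketch. The tree used here is hypothetical: node n has the children 2n and 2n + 1, so it is never stored explicitly and has no leaves. A plain depth-first search that always follows the first child would descend 1, 2, 4, 8, ... forever, while breadth-first search reaches any goal at finite depth.

```python
from collections import deque


def children(n):
    # Hypothetical implicit infinite tree: node n has children 2n and 2n + 1
    return (2 * n, 2 * n + 1)


def bfs_implicit(root, is_goal):
    """Breadth-first search that generates successors on demand."""
    queue = deque([(root, 0)])          # (node, depth) pairs
    while queue:
        node, depth = queue.popleft()
        if is_goal(node):
            return node, depth
        for child in children(node):
            queue.append((child, depth + 1))


found = bfs_implicit(1, lambda n: n == 13)
print(found)
```

No visited set is needed because the structure is a tree. The loop only terminates when a goal exists, which matches the definition of completeness given above.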

An enumeration of the vertices of a graph is said to be a BFS ordering if it is the possible output of the application of BFS to this graph.

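Let $G = (V, E)$ be a graph with $n$ vertices. Recall that $N(v)$ is the set of neighbors of $v$. Let $\sigma = (v_1, \dots, v_m)$ be a list of distinct elements of $V$. For $v \in V \setminus \{v_1, \dots, v_m\}$, let $\nu_\sigma(v)$ be the least $i$ such that $v_i$ is a neighbor of $v$, if such an $i$ exists, and be $\infty$ otherwise.

Let $\sigma = (v_1, \dots, v_n)$ be an enumeration of the vertices of $V$. The enumeration $\sigma$ is said to be a BFS ordering (with source $v_1$) if, for all $1 < i \le n$, $v_i$ is the vertex $w \in V \setminus \{v_1, \dots, v_{i-1}\}$ such that $\nu_{(v_1, \dots, v_{i-1})}(w)$ is minimal. Equivalently, $\sigma$ is a BFS ordering if, for all $1 \le i < j < k \le n$ with $v_i \in N(v_k) \setminus N(v_j)$, there exists a neighbor $v_m$ of $v_j$ such that $m < i$.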

Breadth-first search can be used to solve many problems in graph theory, for example:

Copying garbage collection, Cheney’s algorithm

Finding the shortest path between two nodes u and v, with path length measured by number of edges (an advantage over depth-first search)

(Reverse) Cuthill–McKee mesh numbering

Ford–Fulkerson method for computing the maximum flow in a flow network

Serialization/Deserialization of a binary tree vs serialization in sorted order, allows the tree to be re-constructed in an efficient manner.

Construction of the failure function of the Aho-Corasick pattern matcher.

Testing bipartiteness of a graph.

Implementing parallel algorithms for computing a graph’s transitive closure.
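One item from this list, testing bipartiteness, is short enough to sketch here. The idea is to colour vertices level by level during BFS and to report failure when an edge joins two vertices of the same colour. The graphs `square` and `triangle` are invented test inputs for undirected graphs given as adjacency dictionaries.

```python
from collections import deque


def is_bipartite(adj):
    """Return True if the undirected graph can be 2-coloured."""
    color = {}
    for start in adj:                   # handle every connected component
        if start in color:
            continue
        color[start] = 0
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nxt in adj[node]:
                if nxt not in color:
                    color[nxt] = 1 - color[node]   # opposite colour
                    queue.append(nxt)
                elif color[nxt] == color[node]:
                    return False        # edge inside one colour class
    return True


square = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}    # even cycle
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}             # odd cycle
print(is_bipartite(square), is_bipartite(triangle))
```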

Breadth-First Traversal (or Search) for a graph is similar to Breadth-First Traversal of a tree. The only catch here is, unlike trees, graphs may contain cycles, so we may come to the same node again. To avoid processing a node more than once, we use a boolean visited array. For simplicity, it is assumed that all vertices are reachable from the starting vertex.

For example, in the following graph, we start traversal from vertex 2. When we come to vertex 0, we look for all adjacent vertices of it. 2 is also an adjacent vertex of 0. If we don’t mark visited vertices, then 2 will be processed again and the process will never terminate. A Breadth-First Traversal of the following graph is 2, 0, 3, 1.

    # Python 3 program to print a BFS traversal from a given
    # source vertex. BFS(s) traverses vertices reachable from s.
    from collections import defaultdict, deque


    class Graph:
        """Directed graph using an adjacency list representation."""

        def __init__(self):
            # Default dictionary to store the graph
            self.graph = defaultdict(list)

        def addEdge(self, u, v):
            """Add a directed edge u -> v to the graph."""
            self.graph[u].append(v)

        def BFS(self, s):
            """Print a BFS traversal starting from vertex s."""
            # A set plays the role of the boolean visited array;
            # the source is marked as visited and enqueued
            visited = {s}
            queue = deque([s])

            while queue:
                # Dequeue a vertex from the front of the queue and print it
                s = queue.popleft()
                print(s, end=" ")

                # Mark every unvisited neighbour of the dequeued
                # vertex as visited and enqueue it
                for i in self.graph[s]:
                    if i not in visited:
                        visited.add(i)
                        queue.append(i)


    # Driver code: create the example graph
    g = Graph()
    g.addEdge(0, 1)
    g.addEdge(0, 2)
    g.addEdge(1, 2)
    g.addEdge(2, 0)
    g.addEdge(2, 3)
    g.addEdge(3, 3)

    print("Following is Breadth First Traversal"
          " (starting from vertex 2)")
    g.BFS(2)

In computer science, iterative deepening search or more specifically iterative deepening depth-first search (IDS or IDDFS) is a state space/graph search strategy in which a depth-limited version of depth-first search is run repeatedly with increasing depth limits until the goal is found. IDDFS is optimal like breadth-first search, but uses much less memory; at each iteration, it visits the nodes in the search tree in the same order as depth-first search, but the cumulative order in which nodes are first visited is effectively breadth-first. 11

IDDFS combines depth-first search’s space-efficiency and breadth-first search’s completeness (when the branching factor is finite). If a solution exists, it will find a solution path with the fewest arcs. Since iterative deepening visits states multiple times, it may seem wasteful, but it turns out to be not so costly, since in a tree most of the nodes are in the bottom level, so it does not matter much if the upper levels are visited multiple times.

The main advantage of IDDFS in game tree searching is that the earlier searches tend to improve the commonly used heuristics, such as the killer heuristic and alpha–beta pruning, so that a more accurate estimate of the score of various nodes at the final depth search can occur, and the search completes more quickly since it is done in a better order. For example, alpha–beta pruning is most efficient if it searches the best moves first. 12

A second advantage is the responsiveness of the algorithm. Because early iterations use small values for $d$, they execute extremely quickly. This allows the algorithm to supply early indications of the result almost immediately, followed by refinements as $d$ increases. When used in an interactive setting, such as in a chess-playing program, this facility allows the program to play at any time with the current best move found in the search it has completed so far. This can be phrased as each depth of the search corecursively producing a better approximation of the solution, though the work done at each step is recursive. This is not possible with a traditional depth-first search, which does not produce intermediate results.

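The time complexity of IDDFS in a (well-balanced) tree works out to be the same as breadth-first search, i.e. $O(b^d)$, where $b$ is the branching factor and $d$ is the depth of the goal.

In an iterative deepening search, the nodes at depth $d$ are expanded once, those at depth $d-1$ are expanded twice, and so on up to the root of the search tree, which is expanded $d+1$ times. So the total number of expansions in an iterative deepening search is

$$b^d + 2b^{d-1} + 3b^{d-2} + \cdots + (d-1)b^2 + db + (d+1) = \sum_{i=0}^{d} (d+1-i)\,b^i$$

where $b^d$ is the number of expansions at depth $d$, $2b^{d-1}$ is the number of expansions at depth $d-1$, and so on. Factoring out $b^d$ gives

$$b^d\left(1 + 2b^{-1} + 3b^{-2} + \cdots + (d-1)b^{2-d} + db^{1-d} + (d+1)b^{-d}\right)$$

Now let $x = 1/b = b^{-1}$. Then we have

$$b^d\left(1 + 2x + 3x^2 + \cdots + (d-1)x^{d-2} + dx^{d-1} + (d+1)x^d\right)$$

This is less than the infinite series

$$b^d\left(1 + 2x + 3x^2 + 4x^3 + \cdots\right) = b^d \sum_{n=1}^{\infty} n x^{n-1}$$

which converges to

$$b^d (1-x)^{-2} = \frac{b^d}{(1-x)^2}, \quad \text{for } |x| < 1$$

That is, we have

$$b^d\left(1 + 2x + 3x^2 + \cdots + (d+1)x^d\right) \le b^d (1-x)^{-2}, \quad \text{for } |x| < 1$$

Since $(1-x)^{-2}$, or $\left(1 - \frac{1}{b}\right)^{-2}$, is a constant independent of $d$ (the depth), if $b > 1$ (i.e., if the branching factor is greater than 1), the running time of the depth-first iterative deepening search is $O(b^d)$.

For $b = 10$ and $d = 5$ the number is

$$\sum_{i=0}^{5} (5+1-i)\,10^i = 6 + 50 + 400 + 3000 + 20000 + 100000 = 123456$$

All together, an iterative deepening search from depth 1 all the way down to depth $d$ expands only about 11% more nodes than a single breadth-first or depth-limited search to depth $d$, when $b = 10$. The higher the branching factor, the lower the overhead of repeatedly expanded states, but even when the branching factor is 2, iterative deepening search only takes about twice as long as a complete breadth-first search. This means that the time complexity of iterative deepening is still $O(b^d)$.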
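The overhead can be checked numerically. The sketch below assumes a complete tree with branching factor b in which a node at depth i is expanded (d + 1 - i) times by iterative deepening and once by a single breadth-first search to depth d; the function names are illustrative.

```python
def iddfs_expansions(b, d):
    """Total expansions of iterative deepening to depth d."""
    # The b**i nodes at depth i are expanded (d + 1 - i) times each
    return sum((d + 1 - i) * b**i for i in range(d + 1))


def single_search_expansions(b, d):
    """Expansions of one breadth-first or depth-limited search to depth d."""
    return sum(b**i for i in range(d + 1))


b, d = 10, 5
iddfs_total = iddfs_expansions(b, d)
single_total = single_search_expansions(b, d)
overhead = iddfs_total / single_total - 1
print(iddfs_total, single_total, f"{overhead:.1%}")
```

For b = 10 and d = 5 the two totals are 123456 and 111111, an overhead of roughly 11%. With b = 2 the ratio comes out close to 2, in line with the remark that iterative deepening then takes about twice as long.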

The space complexity of IDDFS is $O(d)$, where $d$ is the depth of the goal.

Since IDDFS, at any point, is engaged in a depth-first search, it need only store a stack of nodes which represents the branch of the tree it is expanding. Since it finds a solution of optimal length, the maximum depth of this stack is $d$, and hence the maximum amount of space is $O(d)$. In general, iterative deepening is the preferred search method when there is a large search space and the depth of the solution is not known.

The Iterative Deepening Depth-First Search (also ID-DFS) algorithm is an algorithm used to find a node in a tree. This means that given a tree data structure, the algorithm will return the first node in this tree that matches the specified condition. Nodes are sometimes referred to as vertices (plural of vertex); here, we’ll call them nodes. The edges have to be unweighted. This algorithm can also work with unweighted graphs if a mechanism to keep track of already visited nodes is added.

The basic principle of the algorithm is to start with a start node, and then look at the first child of this node. It then looks at the first child of that node (grandchild of the start node) and so on, until a node has no more children (we’ve reached a leaf node). It then goes up one level, and looks at the next child. If there are no more children, it goes up one more level, and so on, until it finds more children or reaches the start node. If it hasn’t found the goal node after returning from the last child of the start node, the goal node cannot be found, since by then all nodes have been traversed.

So far this has been describing Depth-First Search (DFS). Iterative deepening adds to this that the algorithm returns one layer up the tree not only when the node has no more children to visit, but also when a previously specified maximum depth has been reached. Also, if we return to the start node, we increase the maximum depth and start the search all over, until we’ve visited all leaf nodes (bottom nodes) and increasing the maximum depth won’t lead to us visiting more nodes.

Specifically, these are the steps:

1. For each child of the current node

2. If it is the target node, return

3. If the current maximum depth is reached, return

4. Set the current node to this node and go back to 1.

5. After having gone through all children, go to the next child of the parent (the next sibling)

6. After having gone through all children of the start node, increase the maximum depth and go back to 1.

7. If we have reached all leaf (bottom) nodes, the goal node doesn’t exist.

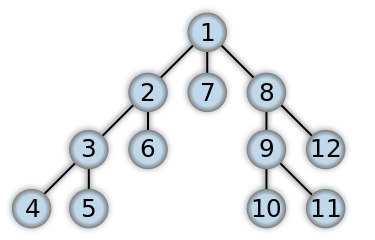

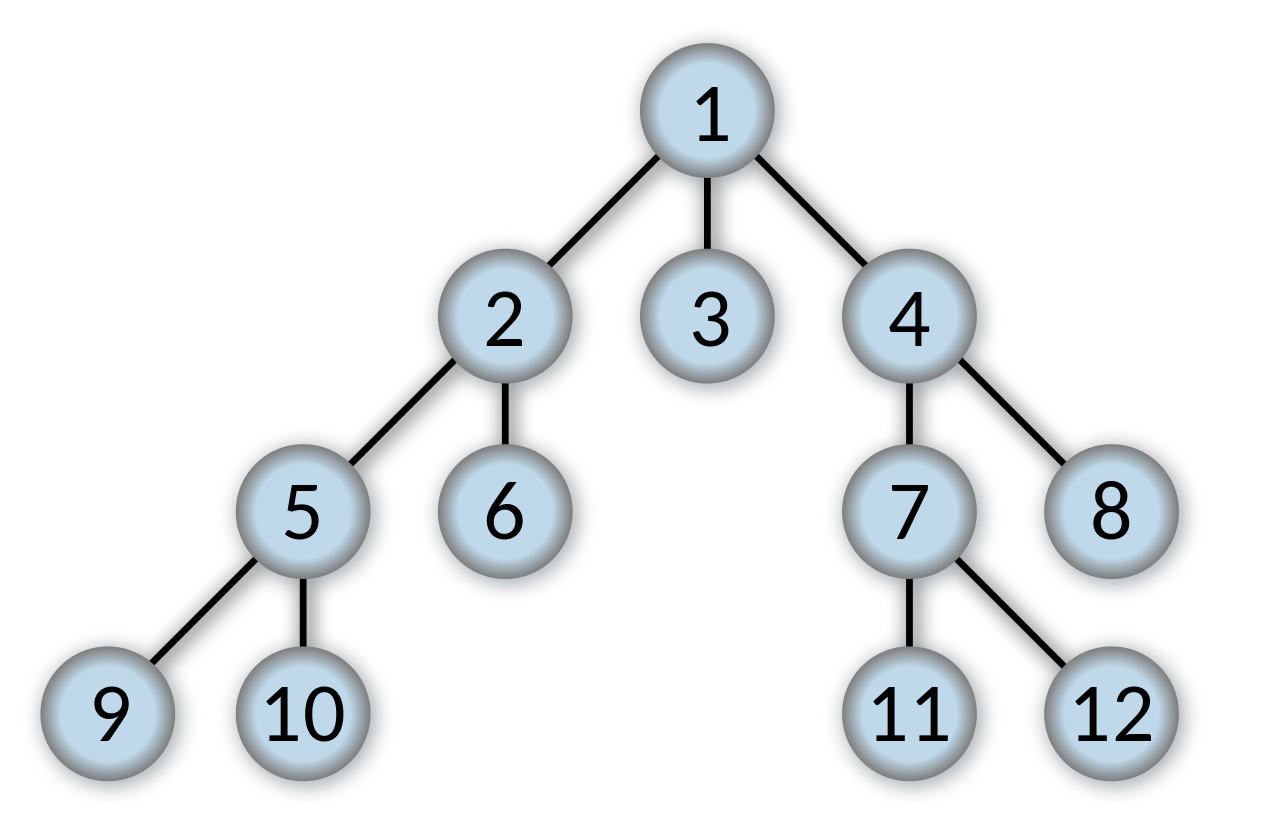

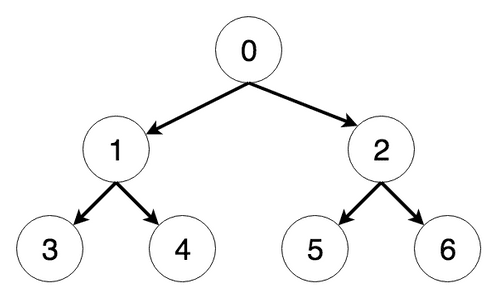

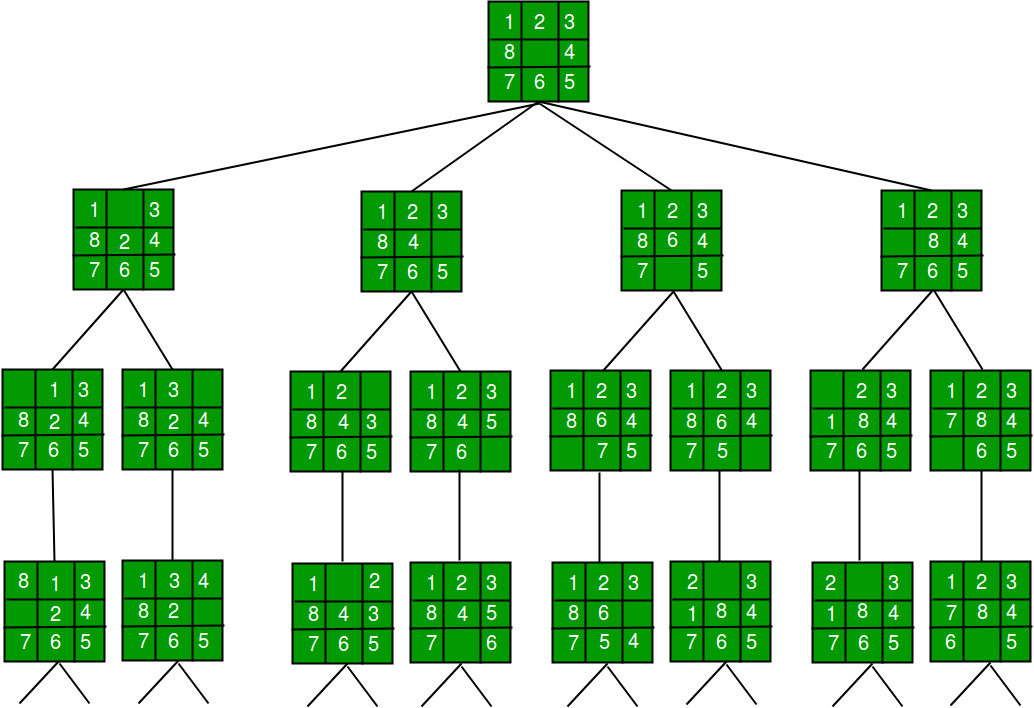

Consider the following tree (see Figure 1.18):

The steps the algorithm performs on this tree if given node 0 as a starting point, in order, are:

Visiting Node 0

Visiting Node 1

Current maximum depth reached, returning

Visiting Node 2

Current maximum depth reached, returning

Increasing depth to 2

Visiting Node 0

Visiting Node 1

Visiting Node 3

Current maximum depth reached, returning

Visiting Node 4

Current maximum depth reached, returning

Visiting Node 2

Visiting Node 5

Current maximum depth reached, returning

Visiting Node 6

Found the node we’re looking for, returning
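The trace above can be reproduced with a short sketch. It assumes the tree has the edges 0→1, 0→2, 1→3, 1→4, 2→5 and 2→6, that node 6 is the target, and that the depth limit starts at 1 and grows by one per round; the name `iddfs_trace` and the log messages are chosen to mirror the listing.

```python
def iddfs_trace(tree, start, target, max_limit):
    """Run iterative deepening and return the list of log messages."""
    log = []

    def dls(node, remaining):
        log.append(f"Visiting Node {node}")
        if node == target:
            log.append("Found the node we're looking for, returning")
            return True
        if remaining == 0:
            log.append("Current maximum depth reached, returning")
            return False
        # any() stops at the first child whose subtree contains the target
        return any(dls(child, remaining - 1) for child in tree.get(node, []))

    for limit in range(1, max_limit + 1):
        if limit > 1:
            log.append(f"Increasing depth to {limit}")
        if dls(start, limit):
            break
    return log


# Assumed example tree
tree = {0: [1, 2], 1: [3, 4], 2: [5, 6]}
log = iddfs_trace(tree, 0, 6, max_limit=3)
print("\n".join(log))
```

The upper levels (nodes 0, 1 and 2) are visited again in the second round, which is the repeated work that iterative deepening accepts in exchange for its low memory use.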

If we double the maximum depth each time we need to go deeper, the runtime complexity of Iterative Deepening Depth-First Search (ID-DFS) is the same as regular Depth-First Search (DFS), since all previous depths added up will have the same runtime as the current depth. The runtime of regular Depth-First Search (DFS) is $O(|N|)$ ($|N|$ = number of nodes in the tree), since every node is traversed at most once. The number of nodes is equal to $b^d$, where $b$ is the branching factor and $d$ is the depth, so the runtime can be rewritten as $O(b^d)$.

The space complexity of Iterative Deepening Depth-First Search (ID-DFS) is the same as regular Depth-First Search (DFS), which is, if we exclude the tree itself, O(d), with d being the depth, which is also the size of the call stack at maximum depth. If we include the tree, the space complexity is the same as the runtime complexity, as each node needs to be saved.

    # Python program to check, with iterative deepening depth-first
    # search (IDDFS), whether a target is reachable within a depth limit
    from collections import defaultdict


    class Graph:
        """Directed graph using an adjacency list representation."""

        def __init__(self, vertices):
            # Number of vertices
            self.V = vertices

            # Default dictionary to store the graph
            self.graph = defaultdict(list)

        def addEdge(self, u, v):
            """Add a directed edge u -> v to the graph."""
            self.graph[u].append(v)

        def DLS(self, src, target, maxDepth):
            """Depth-limited search from the given source 'src'."""
            if src == target:
                return True

            # If the maximum depth is reached, stop recursing
            if maxDepth <= 0:
                return False

            # Recur for all the vertices adjacent to this vertex
            for i in self.graph[src]:
                if self.DLS(i, target, maxDepth - 1):
                    return True
            return False

        def IDDFS(self, src, target, maxDepth):
            """Return True if target is reachable from src within maxDepth edges."""
            # Repeat the depth-limited search with limits 0, 1, ..., maxDepth
            # (maxDepth itself is included, so "within max depth" is inclusive)
            for i in range(maxDepth + 1):
                if self.DLS(src, target, i):
                    return True
            return False


    # Create the example tree
    g = Graph(7)
    g.addEdge(0, 1)
    g.addEdge(0, 2)
    g.addEdge(1, 3)
    g.addEdge(1, 4)
    g.addEdge(2, 5)
    g.addEdge(2, 6)

    target = 6
    maxDepth = 3
    src = 0

    if g.IDDFS(src, target, maxDepth):
        print("Target is reachable from source within max depth")
    else:
        print("Target is NOT reachable from source within max depth")

Also known as Best-first search. Best-first search is a class of search algorithms, which explore a graph by expanding the most promising node chosen according to a specified rule. Judea Pearl described the best-first search as estimating the promise of node n by a “heuristic evaluation function which, in general, may depend on the description of n, the description of the goal, the information gathered by the search up to that point, and most importantly, on any extra knowledge about the problem domain.”

Some authors have used “best-first search” to refer specifically to a search with a heuristic that attempts to predict how close the end of a path is to a solution (or, goal), so that paths which are judged to be closer to a solution (or, goal) are extended first. This specific type of search is called greedy best-first search or pure heuristic search. Efficient selection of the current best candidate for extension is typically implemented using a priority queue.

The A* search algorithm is an example of a best-first search algorithm, as is B*. Best-first algorithms are often used for path finding in combinatorial search. Neither A* nor B* is a greedy best-first search, as they incorporate the distance from the start in addition to estimated distances to the goal. Using a greedy algorithm, expand the first successor of the parent. After a successor is generated:

If the successor’s heuristic is better than its parent, the successor is set at the front of the queue (with the parent reinserted directly behind it), and the loop restarts.

Else, the successor is inserted into the queue (in a location determined by its heuristic value). The procedure will evaluate the remaining successors (if any) of the parent.

In BFS and DFS, when we are at a node, we can consider any adjacent node as the next node. So both BFS and DFS blindly explore paths without considering any cost function. The idea of Best First Search is to use an evaluation function to decide which adjacent node is most promising and then explore it. Best First Search falls under the category of Heuristic Search or Informed Search. We use a priority queue to store the costs of nodes, so the implementation is a variation of BFS in which the queue is replaced by a priority queue. Let us consider the example below.

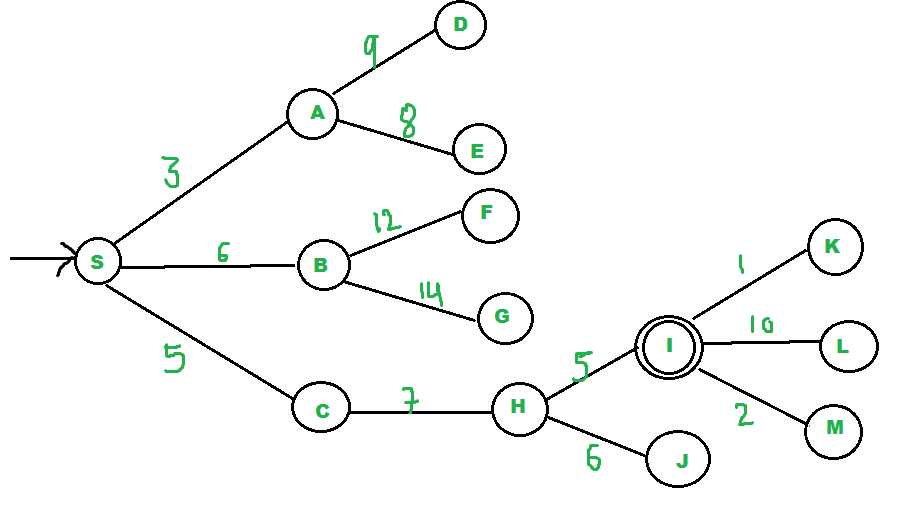

We start from source “S” and search for goal “I” using given costs and Best First search.

“pq” initially contains “S”. We remove “S” from “pq” and add the unvisited neighbors of “S” to “pq”. “pq” now contains {A, C, B} (C is put before B because C has lesser cost).

We remove “A” from “pq” and add the unvisited neighbors of “A” to “pq”. “pq” now contains {C, B, E, D}.

We remove “C” from “pq” and add the unvisited neighbors of “C” to “pq”. “pq” now contains {B, H, E, D}.

We remove “B” from “pq” and add the unvisited neighbors of “B” to “pq”. “pq” now contains {H, E, D, F, G}.

We remove “H” from “pq”. Since our goal “I” is a neighbor of “H”, we return.

Below is the implementation of the above idea:

    from queue import PriorityQueue

    v = 14
    graph = [[] for i in range(v)]


    def best_first_search(source, target, n):
        """Best-first search; prints the nodes in the order they are expanded."""
        visited = [False] * n
        visited[source] = True
        pq = PriorityQueue()
        pq.put((0, source))
        while not pq.empty():
            # Take the node with the lowest cost out of the queue
            u = pq.get()[1]
            print(u, end=" ")
            if u == target:
                break

            # Add every unvisited neighbour with its edge cost as priority
            for neighbour, cost in graph[u]:
                if not visited[neighbour]:
                    visited[neighbour] = True
                    pq.put((cost, neighbour))
        print()


    def addedge(x, y, cost):
        """Add an undirected edge between x and y with the given cost."""
        graph[x].append((y, cost))
        graph[y].append((x, cost))


    # The nodes shown in the above example (by letters) are
    # represented by integers: addedge(x, y, cost)
    addedge(0, 1, 3)
    addedge(0, 2, 6)
    addedge(0, 3, 5)
    addedge(1, 4, 9)
    addedge(1, 5, 8)
    addedge(2, 6, 12)
    addedge(2, 7, 14)
    addedge(3, 8, 7)
    addedge(8, 9, 5)
    addedge(8, 10, 6)
    addedge(9, 11, 1)
    addedge(9, 12, 10)
    addedge(9, 13, 2)

    source = 0
    target = 9
    best_first_search(source, target, v)

Output

0 1 3 2 8 9

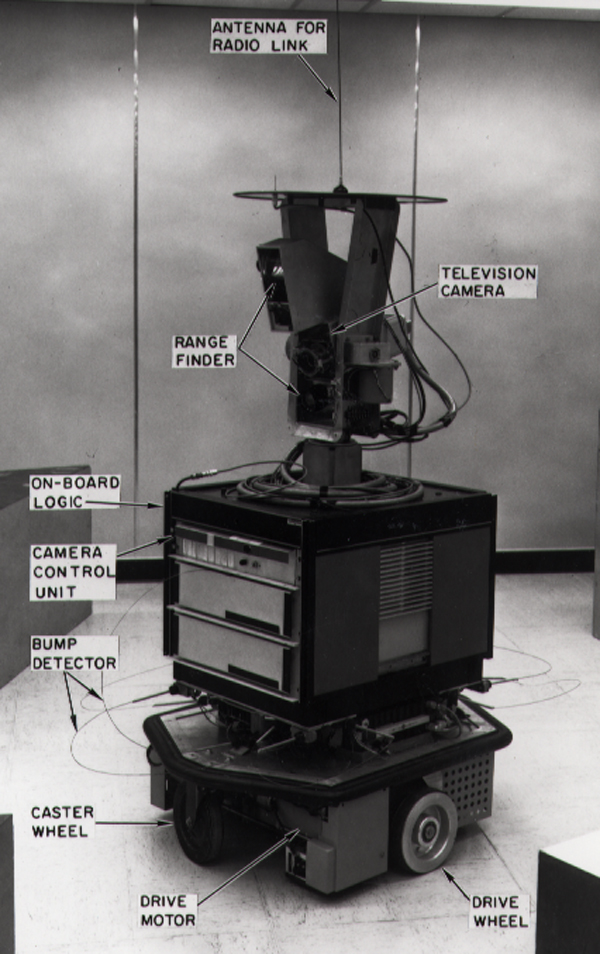

A* (“A-star”) is a graph traversal and path search algorithm, which is often used in many fields of computer science due to its completeness, optimality, and optimal efficiency. 13 One major practical drawback is its space complexity, as it stores all generated nodes in memory. Thus, in practical travel-routing systems, it is generally outperformed by algorithms which can pre-process the graph to attain better performance, as well as memory-bounded approaches; however, A* is still the best solution in many cases. Peter Hart, Nils Nilsson and Bertram Raphael of Stanford Research Institute (now SRI International) first published the algorithm in 1968. 14 It can be seen as an extension of Dijkstra’s algorithm. A* achieves better performance by using heuristics to guide its search.

A* was created as part of the Shakey project, which had the aim of building a mobile robot that could plan its own actions. Nils Nilsson originally proposed using the Graph Traverser algorithm for Shakey’s path planning. Graph Traverser is guided by a heuristic function h(n), the estimated distance from node n to the goal node: it entirely ignores g(n), the distance from the start node to n. Bertram Raphael suggested using the sum, g(n) + h(n). Peter Hart invented the concepts we now call admissibility and consistency of heuristic functions. A* was originally designed for finding least-cost paths when the cost of a path is the sum of its costs, but it has been shown that A* can be used to find optimal paths for any problem satisfying the conditions of a cost algebra.

The original 1968 A* paper contained a theorem stating that no A*-like algorithm could expand fewer nodes than A* if the heuristic function is consistent and A*’s tie-breaking rule is suitably chosen. 15 A “correction” was published a few years later[8] claiming that consistency was not required, but this was shown to be false in Dechter and Pearl’s definitive study of A*’s optimality (now called optimal efficiency), which gave an example of A* with a heuristic that was admissible but not consistent expanding arbitrarily more nodes than an alternative A*-like algorithm.

A* is an informed search algorithm, or a best-first search, meaning that it is formulated in terms of weighted graphs: starting from a specific starting node of a graph, it aims to find a path to the given goal node having the smallest cost (least distance travelled, shortest time, etc.). It does this by maintaining a tree of paths originating at the start node and extending those paths one edge at a time until its termination criterion is satisfied.

At each iteration of its main loop, A* needs to determine which of its paths to extend. It does so based on the cost of the path and an estimate of the cost required to extend the path all the way to the goal. Specifically, A* selects the path that minimizes

$$f(n) = g(n) + h(n)$$

where $n$ is the next node on the path, $g(n)$ is the cost of the path from the start node to $n$, and $h(n)$ is a heuristic function that estimates the cost of the cheapest path from $n$ to the goal. A* terminates when the path it chooses to extend is a path from start to goal or if there are no paths eligible to be extended. The heuristic function is problem-specific. If the heuristic function is admissible, meaning that it never overestimates the actual cost to get to the goal, A* is guaranteed to return a least-cost path from start to goal.

Typical implementations of A* use a priority queue to perform the repeated selection of minimum (estimated) cost nodes to expand. This priority queue is known as the open set or fringe. At each step of the algorithm, the node with the lowest $f(x)$ value is removed from the queue, the $f$ and $g$ values of its neighbors are updated accordingly, and these neighbors are added to the queue. The algorithm continues until a removed node (thus the node with the lowest $f$ value out of all fringe nodes) is a goal node. The $f$ value of that goal is then also the cost of the shortest path, since $h$ at the goal is zero in an admissible heuristic.

The algorithm described so far gives us only the length of the shortest path. To find the actual sequence of steps, the algorithm can be easily revised so that each node on the path keeps track of its predecessor. After this algorithm is run, the ending node will point to its predecessor, and so on, until some node’s predecessor is the start node.
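The predecessor bookkeeping can be illustrated in isolation. The sketch below assumes a hypothetical `parent` dictionary that maps each node to the node it was reached from; the helper name `reconstruct_path` is likewise an assumption for this example.

```python
def reconstruct_path(parent, start, goal):
    """Walk predecessor links from goal back to start; return the path in order."""
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])   # step to the predecessor
    path.reverse()                      # links run goal -> start, so flip them
    return path


# Predecessors as a search might have recorded them (illustrative values).
parent = {"B": "A", "C": "A", "D": "B"}
print(reconstruct_path(parent, "A", "D"))   # ['A', 'B', 'D']
```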

As an example, when searching for the shortest route on a map, $h(x)$ might represent the straight-line distance to the goal, since that is physically the smallest possible distance between any two points. For a grid map from a video game, the Manhattan distance or the octile distance is the better choice, depending on the set of movements available (4-way or 8-way).
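The three distance measures mentioned above can be written as small functions. This sketch assumes that positions are `(x, y)` tuples, that a straight move on the grid costs 1, and that a diagonal move costs $\sqrt{2}$; the function names are chosen for this example.

```python
import math


def euclidean(a, b):
    """Straight-line distance, suitable when movement is unrestricted."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def manhattan(a, b):
    """Grid distance when only 4-way (horizontal/vertical) moves are allowed."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def octile(a, b):
    """Grid distance when 8-way moves are allowed (diagonal cost sqrt(2))."""
    dx, dy = abs(a[0] - b[0]), abs(a[1] - b[1])
    # Move diagonally min(dx, dy) times, then straight for the remainder.
    return max(dx, dy) + (math.sqrt(2) - 1) * min(dx, dy)


print(euclidean((0, 0), (3, 4)))   # 5.0
print(manhattan((0, 0), (3, 4)))   # 7
```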

If the heuristic $h$ satisfies the additional condition $h(x) \le d(x, y) + h(y)$ for every edge $(x, y)$ of the graph (where $d$ denotes the length of that edge), then $h$ is called monotone, or consistent. With a consistent heuristic, A* is guaranteed to find an optimal path without processing any node more than once, and A* is equivalent to running Dijkstra’s algorithm with the reduced cost $d'(x, y) = d(x, y) + h(y) - h(x)$.
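As a rough check of this property, the sketch below tests the usual consistency condition $h(x) \le d(x, y) + h(y)$ on every edge and computes the reduced costs $d(x, y) + h(y) - h(x)$. The function names, the graph format, and the heuristic values are assumptions made for this example.

```python
def is_consistent(graph, h, tol=1e-12):
    """True if h(x) <= d(x, y) + h(y) holds for every directed edge (x, y)."""
    return all(
        h(x) <= d + h(y) + tol
        for x, edges in graph.items()
        for y, d in edges
    )


def reduced_costs(graph, h):
    """Edge costs d(x, y) + h(y) - h(x); non-negative when h is consistent."""
    return {
        x: [(y, d + h(y) - h(x)) for y, d in edges]
        for x, edges in graph.items()
    }


graph = {
    "A": [("B", 1), ("C", 3), ("D", 7)],
    "B": [("D", 5)],
    "C": [("D", 12)],
}
exact = {"A": 6, "B": 5, "C": 12, "D": 0}   # true cost to reach D from each node
skewed = {"A": 6, "B": 0, "C": 0, "D": 0}   # admissible, but violated on edge A-B

print(is_consistent(graph, exact.__getitem__))    # True
print(is_consistent(graph, skewed.__getitem__))   # False
```

The second heuristic never overestimates the true cost, so it is admissible, yet it fails the edge test because $h(A) = 6 > d(A, B) + h(B) = 1$.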

The time complexity of A* depends on the heuristic. In the worst case of an unbounded search space, the number of nodes expanded is exponential in the depth of the solution (the shortest path) $d$: $O(b^d)$, where $b$ is the branching factor (the average number of successors per state). This assumes that a goal state exists at all, and is reachable from the start state; if it is not, and the state space is infinite, the algorithm will not terminate.

The heuristic function has a major effect on the practical performance of A* search, since a good heuristic allows A* to prune away many of the $b^d$ nodes that an uninformed search would expand. Its quality can be expressed in terms of the effective branching factor $b^*$, which can be determined empirically for a problem instance by measuring the number of nodes generated by expansion, $N$, and the depth of the solution, $d$, then solving

$$N + 1 = 1 + b^* + (b^*)^2 + \dots + (b^*)^d.$$

Good heuristics are those with low effective branching factor (the optimal being $b^* = 1$). The time complexity is polynomial when the search space is a tree, there is a single goal state, and the heuristic function $h$ meets the following condition:

$$|h(x) - h^*(x)| = O(\log h^*(x))$$

where $h^*$ is the optimal heuristic, the exact cost to get from $x$ to the goal. In other words, the error of $h$ will not grow faster than the logarithm of the “perfect heuristic” $h^*$ that returns the true distance from $x$ to the goal. The space complexity of A* is roughly the same as that of all other graph search algorithms, as it keeps all generated nodes in memory. In practice, this turns out to be the biggest drawback of A* search, leading to the development of memory-bounded heuristic searches, such as iterative deepening A*, memory-bounded A*, and SMA*.
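The effective branching factor has no closed form in general, but it can be approximated numerically. The sketch below assumes the standard relation $N + 1 = 1 + b^* + (b^*)^2 + \dots + (b^*)^d$ and solves it by bisection; the function name and tolerance are choices made for this example, and it assumes $d \ge 1$ and $N \ge d$.

```python
def effective_branching_factor(n_generated, depth, tol=1e-9):
    """Approximate b* such that 1 + b* + ... + b*^depth == n_generated + 1."""

    def total(b):
        # Number of nodes in a uniform tree of the given depth, root included.
        return sum(b ** i for i in range(depth + 1))

    target = n_generated + 1
    lo, hi = 1.0, float(max(n_generated, 1))   # total(lo) <= target <= total(hi)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if total(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# A search that generated 52 nodes for a solution at depth 5.
print(round(effective_branching_factor(52, 5), 2))   # 1.92
```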

This is a direct implementation of A* on a graph structure. For simplicity and brevity, the heuristic function returns 1 for every node. The graph is represented with an adjacency list, where the keys represent graph nodes and the values contain a list of edges to the corresponding neighboring nodes. Unlike the typical implementations described above, it keeps the open set in a plain Python set and scans it for the node with the lowest $f$ value instead of using a priority queue. The A* algorithm implemented in Python follows:

```python
class Graph:
    # Example of an adjacency list (or rather, an adjacency map):
    # adjacency_list = {
    #     'A': [('B', 1), ('C', 3), ('D', 7)],
    #     'B': [('D', 5)],
    #     'C': [('D', 12)]
    # }

    def __init__(self, adjacency_list):
        self.adjacency_list = adjacency_list

    def get_neighbors(self, v):
        # Nodes with no outgoing edges (such as 'D' above) have no entry,
        # so fall back to an empty list instead of raising KeyError.
        return self.adjacency_list.get(v, [])

    # Heuristic function with equal values for all nodes
    def h(self, n):
        H = {
            'A': 1,
            'B': 1,
            'C': 1,
            'D': 1
        }

        return H[n]

    def a_star_algorithm(self, start_node, stop_node):
        # open_list holds nodes that have been visited but whose neighbors
        # haven't all been inspected; it starts off with the start node.
        # closed_list holds nodes that have been visited
        # and whose neighbors have all been inspected.
        open_list = {start_node}
        closed_list = set()

        # g contains the current distances from start_node to all other nodes;
        # the default value (if a node is not found in the map) is +infinity
        g = {}
        g[start_node] = 0

        # parents maps each node to its predecessor on the best known path
        parents = {}
        parents[start_node] = start_node

        while len(open_list) > 0:
            n = None

            # find a node with the lowest value of f() - evaluation function
            for v in open_list:
                if n is None or g[v] + self.h(v) < g[n] + self.h(n):
                    n = v

            if n is None:
                print('Path does not exist!')
                return None

            # if the current node is the stop_node,
            # reconstruct the path from it back to the start_node
            if n == stop_node:
                reconst_path = []

                while parents[n] != n:
                    reconst_path.append(n)
                    n = parents[n]

                reconst_path.append(start_node)
                reconst_path.reverse()

                print('Path found: {}'.format(reconst_path))
                return reconst_path

            # for all neighbors of the current node do
            for (m, weight) in self.get_neighbors(n):
                # if the neighbor is in neither open_list nor closed_list,
                # add it to open_list and note n as its parent
                if m not in open_list and m not in closed_list:
                    open_list.add(m)
                    parents[m] = n
                    g[m] = g[n] + weight

                # otherwise, check if it's quicker to first visit n, then m,
                # and if it is, update parent data and g data;
                # if the node was in the closed_list, move it to open_list
                else:
                    if g[m] > g[n] + weight:
                        g[m] = g[n] + weight
                        parents[m] = n

                        if m in closed_list:
                            closed_list.remove(m)
                            open_list.add(m)

            # remove n from the open_list and add it to closed_list,
            # because all of its neighbors were inspected
            open_list.remove(n)
            closed_list.add(n)

        print('Path does not exist!')
        return None
```

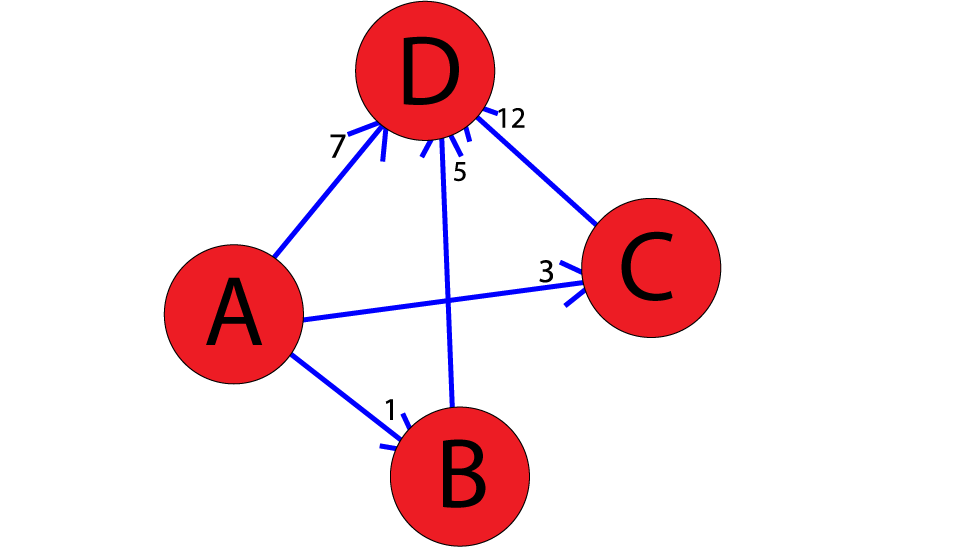

Let’s look at an example with the following weighted graph:

We run the code as follows:

```python
adjacency_list = {
    'A': [('B', 1), ('C', 3), ('D', 7)],
    'B': [('D', 5)],
    'C': [('D', 12)]
}
graph1 = Graph(adjacency_list)
graph1.a_star_algorithm('A', 'D')
```

The call prints the following line and also returns the path as a list:

Path found: ['A', 'B', 'D']

Thus, the optimal path from A to D, found using A*, is $A \rightarrow B \rightarrow D$, with a total cost of $1 + 5 = 6$.

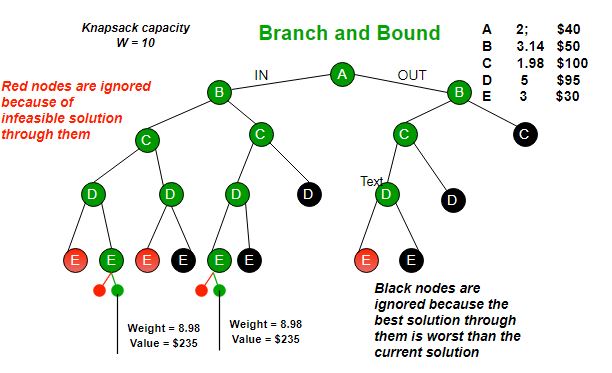

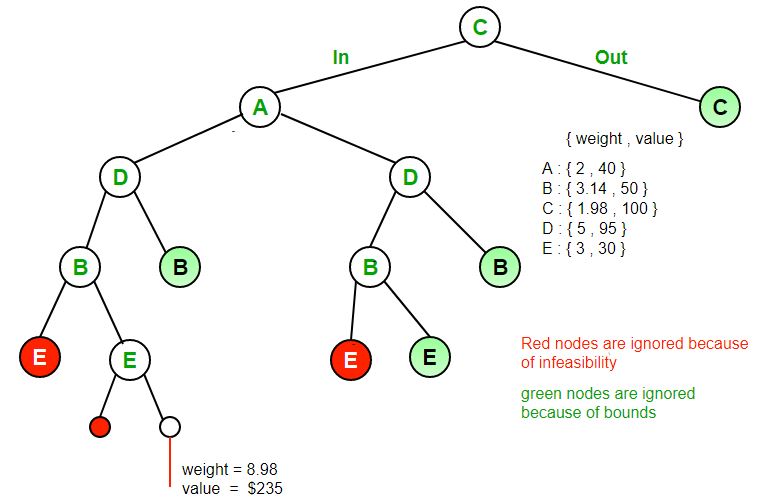

Branch and bound (BB, B&B, or BnB) is an algorithm design paradigm for discrete and combinatorial optimization problems, as well as mathematical optimization. A branch-and-bound algorithm consists of a systematic enumeration of candidate solutions by means of state space search: the set of candidate solutions is thought of as forming a rooted tree with the full set at the root. The algorithm explores branches of this tree, which represent subsets of the solution set. Before enumerating the candidate solutions of a branch, the branch is checked against upper and lower estimated bounds on the optimal solution, and is discarded if it cannot produce a better solution than the best one found so far by the algorithm. The algorithm depends on efficient estimation of the lower and upper bounds of regions/branches of the search space. If no bounds are available, the algorithm degenerates to an exhaustive search.

The method was first proposed by Ailsa Land and Alison Doig whilst carrying out research at the London School of Economics sponsored by British Petroleum in 1960 for discrete programming, and has become the most commonly used tool for solving NP-hard optimization problems. The name “branch and bound” first occurred in the work of Little et al. on the traveling salesman problem.

The goal of a branch-and-bound algorithm is to find a value $x$ that maximizes or minimizes the value of a real-valued function $f(x)$, called an objective function, among some set $S$ of admissible, or candidate, solutions. The set $S$ is called the search space, or feasible region. The rest of this section assumes that minimization of $f(x)$ is desired; this assumption comes without loss of generality, since one can find the maximum value of $f(x)$ by finding the minimum of $g(x) = -f(x)$. A B&B algorithm operates according to two principles:

It recursively splits the search space into smaller spaces, then minimizes $f(x)$ on these smaller spaces; the splitting is called branching.

Branching alone would amount to brute-force enumeration of candidate solutions and testing them all. To improve on the performance of brute-force search, a B&B algorithm keeps track of bounds on the minimum that it is trying to find, and uses these bounds to “prune” the search space, eliminating candidate solutions that it can prove will not contain an optimal solution.

Turning these principles into a concrete algorithm for a specific optimization problem requires some kind of data structure that represents sets of candidate solutions. Such a representation is called an instance of the problem. Denote the set of candidate solutions of an instance $I$ by $S_I$. The instance representation has to come with three operations:

branch($I$) produces two or more instances that each represent a subset of $S_I$. (Typically, the subsets are disjoint to prevent the algorithm from visiting the same candidate solution twice, but this is not required. However, an optimal solution among $S_I$ must be contained in at least one of the subsets.)

bound($I$) computes a lower bound on the value of any candidate solution in the space represented by $I$, that is, bound($I$) $\le f(x)$ for all $x$ in $S_I$.

solution($I$) determines whether $I$ represents a single candidate solution. (Optionally, if it does not, the operation may choose to return some feasible solution from among $S_I$.) If solution($I$) returns a solution, then $f$(solution($I$)) provides an upper bound for the optimal objective value over the whole space of feasible solutions.

Using these operations, a B&B algorithm performs a top-down recursive search through the tree of instances formed by the branch operation. Upon visiting an instance I, it checks whether bound(I) is greater than an upper bound found so far; if so, I may be safely discarded from the search and the recursion stops. This pruning step is usually implemented by maintaining a global variable that records the minimum upper bound seen among all instances examined so far.

The following is the skeleton of a generic branch and bound algorithm for minimizing an arbitrary objective function $f$. To obtain an actual algorithm from this, one requires a bounding function bound that computes lower bounds of $f$ on nodes of the search tree, as well as a problem-specific branching rule. As such, the generic algorithm presented here is a higher-order function.

1. Using a heuristic, find a solution $x_h$ to the optimization problem. Store its value, $B = f(x_h)$. (If no heuristic is available, set $B$ to infinity.) $B$ will denote the value of the best solution found so far, and will be used as an upper bound on candidate solutions.
2. Initialize a queue to hold a partial solution with none of the variables of the problem assigned.
3. Loop until the queue is empty:
   1. Take a node $N$ off the queue.
   2. If $N$ represents a single candidate solution $x$ and $f(x) < B$, then $x$ is the best solution so far. Record it and set $B \leftarrow f(x)$.
   3. Else, branch on $N$ to produce new nodes $N_i$. For each of these:
      1. If bound($N_i$) $> B$, do nothing; since the lower bound on this node is greater than the upper bound of the problem, it will never lead to the optimal solution, and can be discarded.
      2. Else, store $N_i$ on the queue.

Several different queue data structures can be used. This FIFO queue-based implementation yields a breadth-first search. A stack (LIFO queue) will yield a depth-first algorithm. A best-first branch and bound algorithm can be obtained by using a priority queue that sorts nodes on their lower bound. Examples of best-first search algorithms with this premise are Dijkstra’s algorithm and its descendant A* search. The depth-first variant is recommended when no good heuristic is available for producing an initial solution, because it quickly produces full solutions, and therefore upper bounds.
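The generic skeleton can be sketched as a higher-order Python function. This is an illustration under stated assumptions rather than a definitive implementation: the names `branch_and_bound`, `branch`, `bound`, `solution`, and `f` mirror the operations described above, no initial heuristic solution is used (so $B$ starts at infinity), and the small assignment problem used to exercise it is a hypothetical example chosen for brevity.

```python
import math
from collections import deque


def branch_and_bound(root, branch, bound, solution, f):
    """Minimize f over the candidate solutions reachable from root.

    branch(node)   -> iterable of child nodes
    bound(node)    -> lower bound on f for every solution below node
    solution(node) -> a candidate solution, or None if node is still partial
    """
    best, best_value = None, math.inf     # B starts at infinity (no heuristic)
    queue = deque([root])
    while queue:
        node = queue.popleft()            # FIFO: breadth-first; pop() gives depth-first
        x = solution(node)
        if x is not None:
            if f(x) < best_value:         # better than anything seen so far
                best, best_value = x, f(x)
        else:
            for child in branch(node):
                if bound(child) <= best_value:   # otherwise prune the child
                    queue.append(child)
    return best, best_value


# Hypothetical example: assign each of 3 workers (rows) a distinct job (column).
cost = [[9, 2, 7],
        [6, 4, 3],
        [5, 8, 1]]
n = len(cost)


def f(x):
    return sum(cost[row][col] for row, col in enumerate(x))


def solution(node):
    return node if len(node) == n else None


def branch(node):
    return [node + (col,) for col in range(n) if col not in node]


def bound(node):
    # Cost so far plus the cheapest entry of every row not yet assigned.
    return f(node) + sum(min(cost[row]) for row in range(len(node), n))


best, value = branch_and_bound((), branch, bound, solution, f)
print(best, value)   # (1, 0, 2) 9
```

Replacing `queue.popleft()` with `queue.pop()` turns the same code into the depth-first variant, which reaches complete solutions, and therefore finite upper bounds, much earlier.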

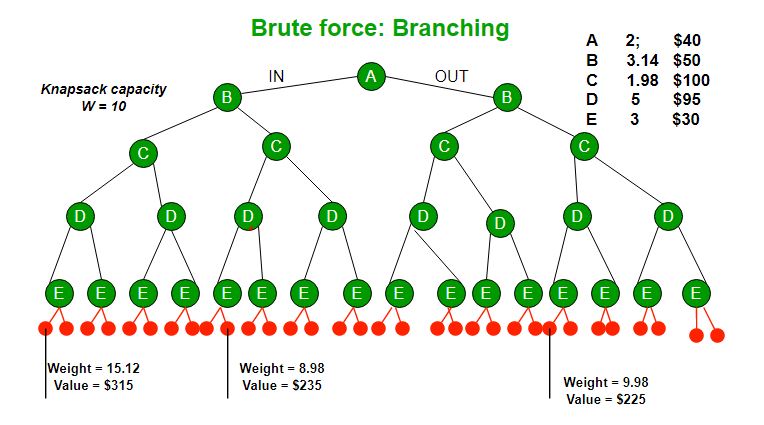

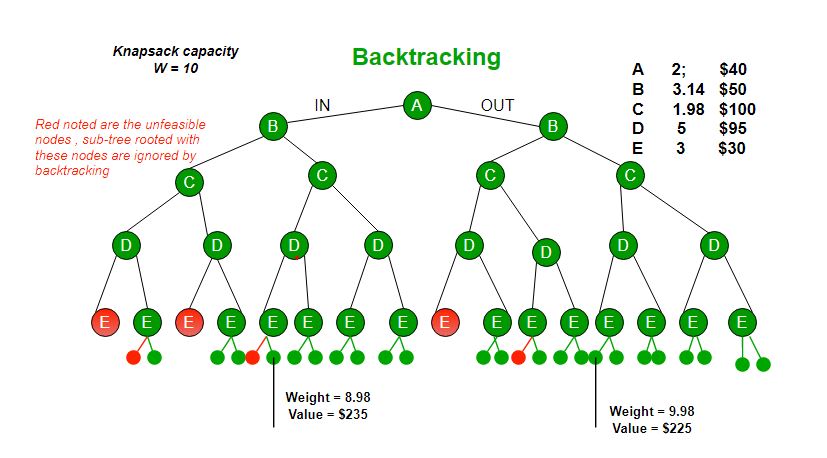

Branch and bound is an algorithm design paradigm that is generally used for solving combinatorial optimization problems. These problems are typically exponential in terms of time complexity and may require exploring all possible permutations in the worst case. The branch and bound technique often solves these problems relatively quickly, because pruning avoids much of that enumeration. Let us consider the 0/1 Knapsack problem to understand Branch and Bound. There are many algorithms by which the knapsack problem can be solved:

Greedy Algorithm for Fractional Knapsack

DP solution for 0/1 Knapsack

Backtracking Solution for 0/1 Knapsack.

The 0/1 Knapsack problem is stated as follows. Given two integer arrays `val[0..n-1]` and `wt[0..n-1]` that represent the values and weights associated with $n$ items respectively, find the maximum-value subset of `val[]` such that the sum of the weights of this subset is smaller than or equal to the knapsack capacity $W$.

Let us explore all approaches for this problem.